Data Migration between MySQL and Neo4j

Principal Consultant, GraphAware

5 min read

Many organizations that are looking at modeling highly connected data to add business intelligence or analytical capabilities using Neo4j already have a database in place.

Introducing a graph database into the mix does not have to be a disruptive process. As with any technology, understanding its place and contribution to the entire system is key to determining how the pieces fit together.

Dealing with Two Data Stores

- The existing database, MySQL in our case, would continue to be the primary system of record.

- Neo4j would be a secondary data store with a much smaller subset of data, used in two specific ways:

- OLTP mode, where it’s essential to the business to have some questions answered in near real time and therefore have certain sets of data as current as possible.

- Batch mode, where some data is collected and processed in a delayed manner.

This implies that first, we need a way to bring the new Neo4j system up to speed with data already collected over time in our primary RDBMS, and secondly, a way to keep them both in sync once the Neo4j system is up and running.

Instead of exporting data and then importing it into Neo4j for the initial load, and then planning for keeping the databases in sync, we decided to first not worry about how the data would be supplied and just design our application without assumptions about the data source.

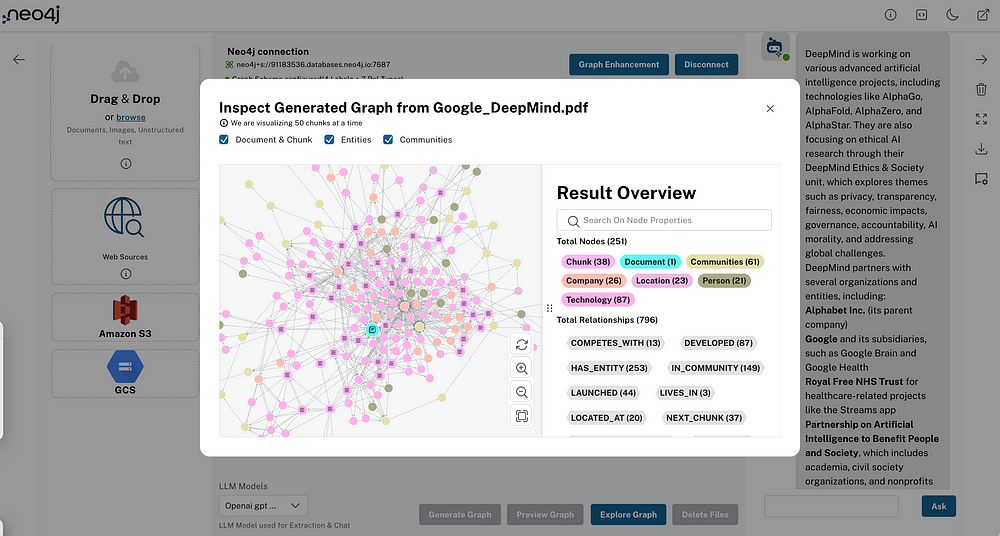

The Data Model

For the purpose of this post, I will use an extremely simplified set of entities to better describe the process. Note that the design and code are put together to illustrate the example and it is not necessarily working code.

Assume that we wish to model Customers buying Products sold by Merchants.

We set up POJOs representing the domain objects – a Product, Merchant and Customer class.

Their corresponding DAO’s take care of persistence to and from Neo4j. We used mutating Cypher to store a representation of the object as nodes and relationships, in a few cases dropping down to the Neo4j API for complex objects.

Every entity ties back to the RDBMS via an id which is the primary key in the system of record and it is this key that is indexed.

The graph model for this looks like:

Once that part is done, the application is testable independent of the data source.

Initial Import

For the initial import of data, the only thing that matters is to be able to transform the data received into the domain objects and save them.

Options include SQL queries to fetch only the data you need, or an API exposed by the primary system – if it exists – or an exported set of data.

Let’s use SQL:

SELECT id, name from customers where Customer customer=new Customer(rs.getInt(“id”), rs.getString(“name”)); customer.save();

// Creates or updates a customer node. Indexes the id if it is created.

SELECT id, name from products where Product product=new Product(rs.getInt(“id”),rs.getString(“name”)); product.save();

// Creates or updates a product node. Indexes the id if it is created.

SELECT id, name, type from merchants where Merchant merchant=new Merchant(rs.getInt(“id”), rs.getName(“name”)); merchant.setType(rs.getString(“type”)); merchant.save();

// Creates or updates a merchant node. Creates a “type” node if it does not already exist. Creates a relationship between the merchant and type node. Indexes the type and merchant ID if they are created.

SELECT customer_id,product_id,purchase_date from customerPurchases Customer customer=repository.getById(rs.getInt(“customer_id”)); customer.purchaseProduct(rs.getInt(“product_id”),rs.getDate(“purchase_date”);

// Creates a relation from the customer to the product and sets the purchase date as a property on the relation.

Keeping Data in Sync

Once the data is imported and both systems are now running, the next task is to keep the data in sync.

Depending on what you plan to do with Neo4j, you might decide that periodic imports of data serve the purpose and you might run a scheduled process to do essentially what we did in the initial import.

Or, you might need to know about things as they happen. In that case, an event-based integration is a simple, but powerful solution.

As “events” take place in the primary system such as a new product created, or a customer purchased a product, the event is published and the Neo4j application picks it up and deals with it. The link between the two systems can be as simple as a messaging queue where the content of the message might be custom content, the result of an API call or anything that shares the information necessary for the secondary system to make sense of.

Whatever that content, again, all we need is the ability to pick it up, parse it and call business methods on our domain.

The Result

The applications are loosely coupled and the problem of consuming data from multiple sources in multiple formats is reduced to a simple problem of parsing.

We found that this approach worked well. The import can take a bit of time since it is transactional, but considering that the initial import is not a frequent process, the wait is worth not introducing another data import tool. Once you get past that point, the event-based sync works nicely.

As mentioned at the start of this article, understanding the pattern of data sync for your application is very important to determine how to go about it. If you need a one-time migration of data, the approach above might be overkill and you should consider some of the excellent tools available such as the Batch Importer , GEOFF, or the REST batch API if using the Neo4j Server.

Also, Spring Data is something to look into if you wish to use annotated automatically-mapped entity classes.

Want to learn more about graph databases? Click below to get your free copy of O’Reilly’s Graph Databases ebook and discover how to use graph technologies for your application today.