Quickly Build a ReAct Agent With LangGraph and MCP

Consulting Engineer, Neo4j

Learn how to incorporate local and MCP-hosted tools into your agents

A common question I get when working with clients is how to set up an agent with MCP tools. In this post, I’ll walk through a simple LangGraph agent that uses both a PyPI-hosted MCP server and tools defined locally in Python. While this example is a Text2Cypher agent built to connect to a Neo4j graph database, the concepts apply to any agent or MCP server.

If you’d like to just pull the repo yourself and get started, there’s a detailed README that walks through how to deploy the agent locally. Otherwise, I’ll provide a bit more detail here.

You can install the required packages with either uv or pip, although uv is preferred. This agent is built using an OpenAI LLM (and, therefore, requires an OpenAI API key), but it’s simple to swap this for your LLM of choice.

Please note that you don’t need your own Neo4j database to run this demo agent. The provided connection credentials are for a publicly accessible demo movies database.

You can run and interact with the agent via the command line.

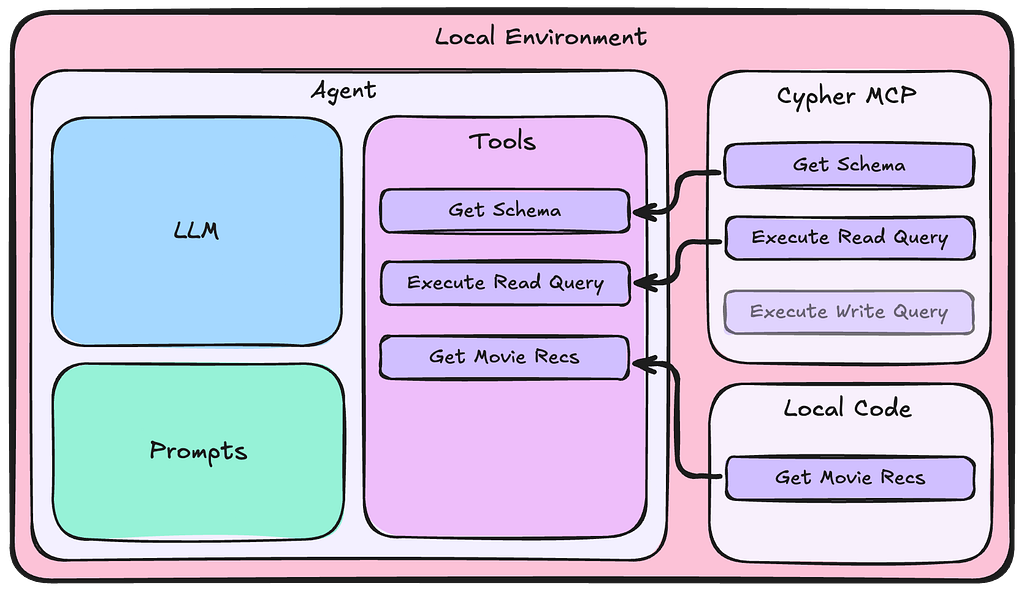

Agent Architecture

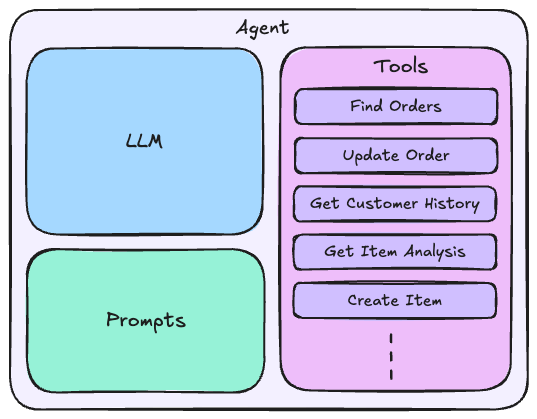

Agents have three primary components: the LLM, prompts, and tools.

Tools may be provided by MCP servers or defined locally within the code. Assuming that the tools have been well documented, it’s the responsibility of the prompts and LLM to effectively use these tools.

The prompts should provide guidance on how the agent should behave and any specific instructions it should follow.

The LLM should be capable of intelligently deciding which tools to execute (and in what order, if necessary) to effectively gather the appropriate context required to answer the input questions.

Neo4j Cypher MCP Server

We’ll be using the Neo4j Cypher MCP server for some of our agent’s tools. Cypher is the query language used by Neo4j. This server provides three tools:

- get_neo4j_schema

- read_neo4j_cypher

- write_neo4j_cypher

Our agent will be capable of generating Cypher queries on the fly to address user questions about movies. We don’t need it to modify the data, so we’ll only use the get_neo4j_schema and read_neo4j_cypher tools from this server.

Check out the documentation to learn more about this MCP server.

Agent Code

The code is found in the agent.py file. It builds a ReAct agent using LangGraph with tools from the Neo4j Cypher MCP server and another locally defined tool. The agent can generate novel Cypher queries or use its recommendation search tool to answer user questions about movies. You can interact with this agent via the command line.

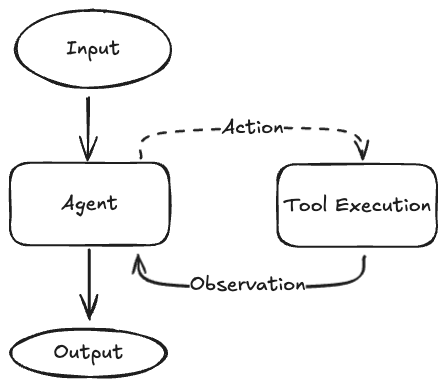

A ReAct agent follows a simple process:

1. The agent receives the input question.

2. The agent decides which tool to call in order to answer the question.

3. The agent executes the chosen tool.

4. Tool execution results are appended to the context.

5. The agent analyzes the updated context.

6. Repeat steps 2–5 until the agent has the proper context to answer the question.

7. Return final response to the user.
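The process above can be sketched in plain Python. This is a minimal illustration rather than LangGraph's implementation: `fake_model`, `TOOLS`, and `lookup_year` are hypothetical stand-ins for the LLM and its tools, and the model's decision is hard-coded so the example runs without an API key.

```python
from typing import Callable


def lookup_year(title: str) -> str:
    """Hypothetical tool: look up a release year in a tiny in-memory table."""
    years = {"Toy Story": "1995"}
    return years.get(title, "unknown")


# Hypothetical tool registry: tool name -> plain Python function
TOOLS: dict[str, Callable[[str], str]] = {"lookup_year": lookup_year}


def fake_model(context: list[dict]) -> dict:
    """Stand-in for the LLM: request a tool until a tool result is in the context."""
    tool_results = [m for m in context if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "lookup_year", "arg": "Toy Story"}
    year = tool_results[-1]["content"]
    return {"type": "final", "content": f"Toy Story was released in {year}."}


def react_loop(question: str, max_steps: int = 5) -> str:
    # The agent receives the input question
    context = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        # The model inspects the context and either picks a tool or answers
        decision = fake_model(context)
        if decision["type"] == "final":
            return decision["content"]
        # Execute the chosen tool and append its result to the context
        result = TOOLS[decision["name"]](decision["arg"])
        context.append({"role": "tool", "content": result})
    return "Stopped: step limit reached."


answer = react_loop("When was Toy Story released?")
print(answer)
```

The `max_steps` guard is a design choice of this sketch: it keeps a model that never produces a final answer from looping forever.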

Imports and Setup

We first import the required libraries. We’ll primarily use LangChain and LangGraph for our agent. The MCP implementation will be handled by the MCP and LangChain MCP Adapters libraries.

```python
import asyncio
import os
from typing import Any

from dotenv import load_dotenv
from langchain_core.messages import AnyMessage
from langchain_core.messages.utils import count_tokens_approximately, trim_messages
from langchain_core.tools import StructuredTool
from langchain_mcp_adapters.tools import load_mcp_tools
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.prebuilt import create_react_agent
from langgraph.prebuilt.chat_agent_executor import AgentState
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client
from neo4j import GraphDatabase, RoutingControl
from pydantic import BaseModel, Field

if load_dotenv():
    print("Loaded .env file")
else:
    print("No .env file found")
```

Local Tool Definition

The next step is to define our local tool. This is a movie recommendations search tool specific to our use case. This tool definition is done in three steps.

First, we define the actual function to execute if the tool is chosen. This is a simple Python function that takes movie_title, min_user_rating, and limit as arguments and returns a list of Python dictionaries, one per recommended movie. The arguments are passed as parameters to a Cypher query that uses graph traversals to identify recommended movies.
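To make the traversal concrete, here is a rough in-memory analogue of the recommendation logic in plain Python. The `RATINGS` data and the `recommend` helper are made up for illustration, the `imdbRating` filter is omitted, and `min_supporters` defaults to 1 so that the toy data returns something; the real tool runs the equivalent logic as Cypher against Neo4j.

```python
from collections import defaultdict

# Hypothetical (user, movie) -> rating data standing in for RATED relationships
RATINGS = {
    ("ann", "Toy Story"): 5.0, ("ann", "Aladdin"): 4.5, ("ann", "Heat"): 2.0,
    ("bob", "Toy Story"): 4.0, ("bob", "Aladdin"): 4.0, ("bob", "Babe"): 5.0,
    ("cat", "Toy Story"): 3.0, ("cat", "Babe"): 5.0,
}


def recommend(movie_title, min_user_rating=4.0, limit=10, min_supporters=1):
    # Users who rated the target movie at or above the threshold
    fans = {
        user for (user, movie), rating in RATINGS.items()
        if movie == movie_title and rating >= min_user_rating
    }
    # Other movies those same users also rated at or above the threshold
    liked = defaultdict(list)
    for (user, movie), rating in RATINGS.items():
        if user in fans and movie != movie_title and rating >= min_user_rating:
            liked[movie].append(rating)
    rows = [
        {
            "title": movie,
            "people_who_loved_both": len(ratings),
            "avg_rating": round(sum(ratings) / len(ratings), 2),
        }
        for movie, ratings in liked.items()
        if len(ratings) >= min_supporters
    ]
    # Most supporters first, then highest average rating
    rows.sort(key=lambda row: (-row["people_who_loved_both"], -row["avg_rating"]))
    return rows[:limit]


recommendations = recommend("Toy Story")
print(recommendations)
```

In this toy data, only ann and bob rated Toy Story at 4.0 or higher, so cat's ratings are ignored. The article's actual function, which expresses this as a graph traversal, follows.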

```python
def find_movie_recommendations(
    movie_title: str, min_user_rating: float = 4.0, limit: int = 10
) -> list[dict[str, Any]]:
    """
    Search the database for movie recommendations based on movie title and rating criteria.
    """
    query = """
    MATCH (target:Movie)
    WHERE target.title = $movieTitle
    MATCH (target)<-[r1:RATED]-(u:User)
    WHERE r1.rating >= $minRating
    MATCH (u)-[r2:RATED]->(similar:Movie)
    WHERE similar <> target
      AND r2.rating >= $minRating
      AND similar.imdbRating IS NOT NULL
    WITH similar, count(*) as supporters, avg(r2.rating) as avgRating
    WHERE supporters >= 10
    RETURN similar.title, similar.year, similar.imdbRating,
           supporters as people_who_loved_both,
           round(avgRating, 2) as avg_rating_by_target_lovers
    ORDER BY supporters DESC, avgRating DESC
    LIMIT $limit
    """

    # Use the driver as a context manager so the connection is closed after the query
    with GraphDatabase.driver(
        os.getenv("NEO4J_URI"),
        auth=(os.getenv("NEO4J_USERNAME"), os.getenv("NEO4J_PASSWORD")),
    ) as driver:
        results = driver.execute_query(
            query,
            parameters_={"movieTitle": movie_title, "minRating": min_user_rating, "limit": limit},
            database_=os.getenv("NEO4J_DATABASE"),
            routing_=RoutingControl.READ,
            result_transformer_=lambda r: r.data(),  # -> list of dicts, one per record
        )

    return results
```

Next, we define a Pydantic object that represents the input arguments to our tool. This will communicate what information is required from the agent and validate any provided input before passing it to the tool.

For example, the min_user_rating argument has default, ge, and le properties that ensure a few things:

- If no value is provided, 4.0 will be used.

- Values must be between 0.5 and 5.0, inclusive.
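The effect of these properties can be checked with a small stand-alone model. `RatingArgs` below is a hypothetical model written only for this illustration; it reuses the same default, ge, and le values.

```python
from pydantic import BaseModel, Field, ValidationError


class RatingArgs(BaseModel):
    """Hypothetical model with the same constraints as min_user_rating."""

    min_user_rating: float = Field(default=4.0, ge=0.5, le=5.0)


# No value provided, so the default is used
default_value = RatingArgs().min_user_rating

# The lower bound is inclusive, so 0.5 is accepted
accepted_value = RatingArgs(min_user_rating=0.5).min_user_rating

# A value above the upper bound is rejected before any tool code would run
try:
    RatingArgs(min_user_rating=5.5)
    rejected = False
except ValidationError:
    rejected = True

print(default_value, accepted_value, rejected)
```

The tool's actual input schema is defined next.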

```python
class FindMovieRecommendationsInput(BaseModel):
    movie_title: str = Field(
        ...,
        description="The title of the movie to find recommendations for. If beginning with 'The', then will follow format of 'Title, The'.",
    )
    min_user_rating: float = Field(
        default=4.0,
        description="The minimum rating of the movie to find recommendations for. ",
        ge=0.5,
        le=5.0,
    )
    limit: int = Field(
        default=10,
        description="The maximum number of recommendations to return. ",
        ge=1,
    )
```

Finally, we combine the above information to create our StructuredTool object that will be exposed to the agent. We only provide the func, args_schema, and return_direct here.

The name and description arguments are inferred from the find_movie_recommendations function, and we don’t need to provide an async version of this function for demo purposes (this would be provided in the coroutine argument). We declare return_direct as False since we don’t want to return the raw Cypher results directly to the user:

```python
find_movie_recommendations_tool = StructuredTool.from_function(
    func=find_movie_recommendations,  # -> The function that the tool calls when executed
    # name=..., -> this is populated by the function name
    # description=..., -> this is populated by the function docstring
    args_schema=FindMovieRecommendationsInput,  # -> The input schema for the tool
    return_direct=False,  # -> Whether to return the raw result to the user
    # coroutine=..., -> An async version of the function
)
```

MCP Implementation

We’ll use the Neo4j Cypher MCP server for two of our agent’s tools: read_neo4j_cypher and get_neo4j_schema.

We can use the StdioServerParameters object to ensure that we’re structuring our MCP parameters correctly. We’re using stdio here since we need to spin up a local version of the MCP server for our agent to access.

It’s possible to use multiple MCP servers and remotely hosted MCP servers. Please see the MCP docs and LangChain MCP Adapters docs for more information.

Since we’re using an MCP server with code hosted on PyPI, we may use the uvx command provided by the uv package manager to deploy our server. This command pulls down the server code from PyPI and locally hosts the MCP server for us in the back end:

```python
neo4j_cypher_mcp = StdioServerParameters(
    command="uvx",
    args=["mcp-neo4j-cypher@0.3.0", "--transport", "stdio"],
    env={
        "NEO4J_URI": os.getenv("NEO4J_URI"),
        "NEO4J_USERNAME": os.getenv("NEO4J_USERNAME"),
        "NEO4J_PASSWORD": os.getenv("NEO4J_PASSWORD"),
        "NEO4J_DATABASE": os.getenv("NEO4J_DATABASE"),
    },
)
```

Within our main function, we have the following code to locally host and access our MCP server. Within the context manager, we can access our MCP tools. Here, we select only the two tools we want to use, then add our local find_movie_recommendations_tool to the tools list:

```python
async def main():
    # start up the MCP server locally and run our agent
    async with stdio_client(neo4j_cypher_mcp) as (read, write):
        async with ClientSession(read, write) as session:
            # we can use the MCP server within this context manager

            # Initialize the connection
            await session.initialize()

            # Get tools
            mcp_tools = await load_mcp_tools(session)

            # We only need to get schema and execute read queries from the Cypher MCP server
            allowed_tools = [
                tool for tool in mcp_tools if tool.name in {"get_neo4j_schema", "read_neo4j_cypher"}
            ]

            # We can also add non-mcp tools for our agent to use
            allowed_tools.append(find_movie_recommendations_tool)

            # more code
            ...
```

Our final tools list contains:

- get_neo4j_schema

- read_neo4j_cypher

- find_movie_recommendations

Utility Functions

We have two utility functions for our agent. Both these functions are based on LangGraph documentation and can be further explored in their respective pages found in the function docstrings below.

The first is pre_model_hook, which modifies the context passed to the LLM for inference. This function runs before every LLM call.

Notice that we set include_system to True when we trim our message history. This is because the system message includes valuable information that instructs the LLM on how to perform tasks. If we don’t include this system message, response quality may degrade once the context grows beyond our declared window of 30,000 tokens.
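The idea of trimming while always keeping the system message can be illustrated without LangChain. The sketch below is not how trim_messages is implemented: `trim_keep_system` and the word-count `count_tokens` are simplifications invented for this example, and it ignores options such as start_on and end_on.

```python
def count_tokens(message: dict) -> int:
    # Crude stand-in for a token counter: one token per whitespace-separated word
    return len(message["content"].split())


def trim_keep_system(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep the system message, then as many of the most recent messages as fit."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m) for m in system)
    kept: list[dict] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "You are a Neo4j expert"},
    {"role": "user", "content": "What movies did Tom Hanks act in"},
    {"role": "assistant", "content": "He acted in many movies"},
    {"role": "user", "content": "Recommend something like Toy Story"},
]
trimmed = trim_keep_system(history, max_tokens=15)
print([m["role"] for m in trimmed])
```

With a budget of 15 word-tokens, the oldest user message is dropped while the system message survives. The article's actual hook, which relies on LangChain's trim_messages, follows.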

```python
def pre_model_hook(state: AgentState) -> dict[str, list[AnyMessage]]:
    """
    This function will be called every time before the node that calls LLM.

    Documentation:
    https://langchain-ai.github.io/langgraph/how-tos/create-react-agent-manage-message-history/?h=create_react_agent

    Parameters
    ----------
    state : AgentState
        The state of the agent.

    Returns
    -------
    dict[str, list[AnyMessage]]
        The updated messages to pass to the LLM as context.
    """
    trimmed_messages = trim_messages(
        state["messages"],
        strategy="last",
        token_counter=count_tokens_approximately,
        max_tokens=30_000,
        start_on="human",
        end_on=("human", "tool"),
        include_system=True,  # -> We always want to include the system prompt in the context
    )

    # You can return updated messages either under:
    # `llm_input_messages` -> To keep the original message history unmodified in the graph state and pass the updated history only as the input to the LLM
    # `messages` -> To overwrite the original message history in the graph state with the updated history
    return {"llm_input_messages": trimmed_messages}
```

The second is print_astream and is used to format and print the conversation to the command line:

```python
async def print_astream(async_stream, output_messages_key: str = "llm_input_messages") -> None:
    """
    Print the stream of messages from the agent.

    Based on the documentation:
    https://langchain-ai.github.io/langgraph/how-tos/create-react-agent-manage-message-history/?h=create_react_agent#keep-the-original-message-history-unmodified

    Parameters
    ----------
    async_stream : AsyncGenerator[dict[str, dict[str, list[AnyMessage]]], None]
        The stream of messages from the agent.
    output_messages_key : str, optional
        The key to use for the output messages, by default "llm_input_messages".
    """
    async for chunk in async_stream:
        for node, update in chunk.items():
            print(f"Update from node: {node}")
            messages_key = output_messages_key if node == "pre_model_hook" else "messages"
            for message in update[messages_key]:
                if isinstance(message, tuple):
                    print(message)
                else:
                    message.pretty_print()

        print("\n\n")
```

Agent Creation

Once we have the above code in place, creating the ReAct agent is simple.

Our system prompt provides brief guidance on how to handle Cypher generation errors and how to respond to the user:

```python
SYSTEM_PROMPT = """You are a Neo4j expert that knows how to write Cypher queries to address movie questions.

As a Cypher expert, when writing queries:
* You must always ensure you have the data model schema to inform your queries
* If an error is returned from the database, you may refactor your query or ask the user to provide additional information
* If an empty result is returned, use your best judgement to determine if the query is correct.

If using a tool that does NOT require writing a Cypher query, you do not need the database schema.

As a well respected movie expert:
* Ensure that you provide detailed responses with citations to the underlying data"""
```

We use LangGraph’s built-in create_react_agent function to initialize our agent. We’re using OpenAI GPT-4.1 here, but you can swap this for another LLM if you’d like. This may require installing the provider’s LangChain interface library.

```python
async def main():
    # start up the MCP server locally and run our agent
    async with stdio_client(neo4j_cypher_mcp) as (read, write):
        async with ClientSession(read, write) as session:
            # hidden code
            ...

            # Create and run the agent
            agent = create_react_agent(
                "openai:gpt-4.1",  # -> The model to use
                allowed_tools,  # -> The tools to use
                pre_model_hook=pre_model_hook,  # -> The function to call before the model is called
                checkpointer=InMemorySaver(),  # -> The checkpoint to use
                prompt=SYSTEM_PROMPT,  # -> The system prompt to use
            )
```

Conversation Loop

We then have some code that allows the user to provide text input to the agent and prints the conversation to the command line. You may exit the chat session by entering exit, quit, or q. The thread_id in the CONFIG variable allows the agent to maintain conversation state using the InMemorySaver checkpointer.
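To see why the thread_id matters, consider a toy stand-in for a checkpointer. `ToyCheckpointer` below is hypothetical and far simpler than InMemorySaver; it only shows the idea that history is stored and retrieved per thread_id, so two different IDs never share a conversation.

```python
from collections import defaultdict


class ToyCheckpointer:
    """Hypothetical, simplified stand-in for a checkpointer: history keyed by thread_id."""

    def __init__(self) -> None:
        self._threads: dict[str, list[str]] = defaultdict(list)

    def append(self, config: dict, message: str) -> list[str]:
        # Look up the conversation by the thread_id carried in the config
        thread_id = config["configurable"]["thread_id"]
        self._threads[thread_id].append(message)
        return list(self._threads[thread_id])


memory = ToyCheckpointer()
config_a = {"configurable": {"thread_id": "1"}}
config_b = {"configurable": {"thread_id": "2"}}

memory.append(config_a, "Who directed Toy Story?")
thread_a = memory.append(config_a, "What else did they direct?")
thread_b = memory.append(config_b, "Hello")
print(len(thread_a), len(thread_b))
```

Because the demo uses a single fixed thread_id, every turn in one chat session builds on the same history. The actual conversation loop follows.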

```python
CONFIG = {"configurable": {"thread_id": "1"}}


async def main():
    # start up the MCP server locally and run our agent
    async with stdio_client(neo4j_cypher_mcp) as (read, write):
        async with ClientSession(read, write) as session:
            # hidden code
            ...

            # conversation loop
            print(
                "\n===================================== Chat =====================================\n"
            )

            while True:
                user_input = input("> ")
                if user_input.lower() in {"exit", "quit", "q"}:
                    break
                await print_astream(
                    agent.astream({"messages": user_input}, config=CONFIG, stream_mode="updates")
                )
```

Running the Agent

Finally, we can run our agent:

```bash
uv run python3 agent.py
# or
python3 agent.py
```

This will begin a chat session in the command line.

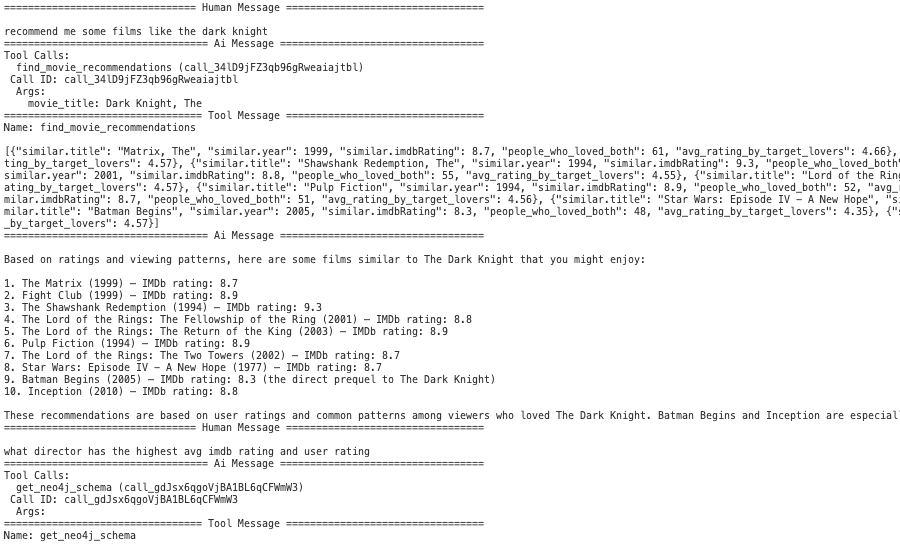

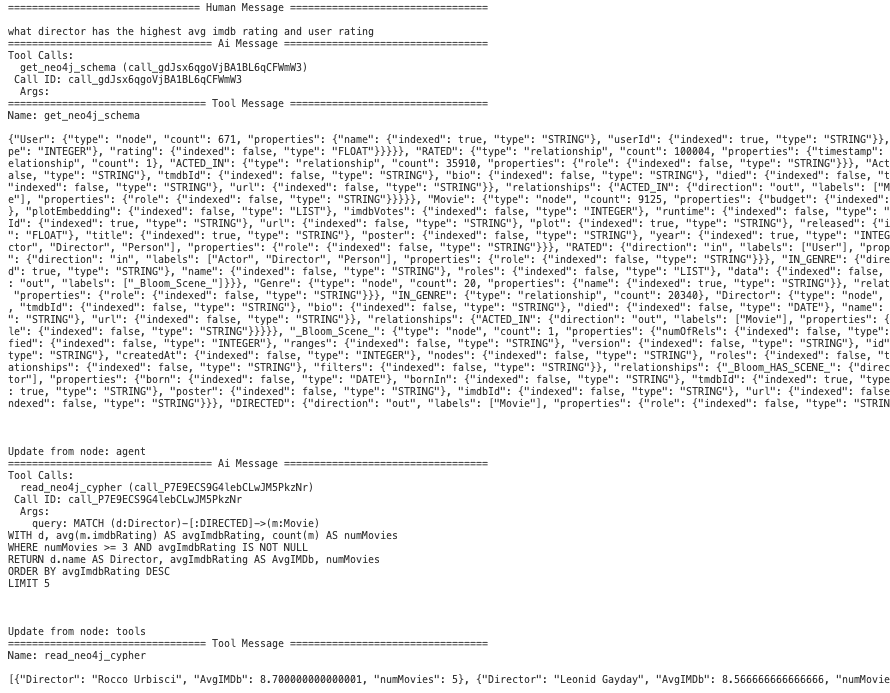

We see that the agent is able to appropriately select the right tools for the request. Here it is using the find_movie_recommendations tool when it needs to find recommendations.

And here it is using get_neo4j_schema and read_neo4j_cypher when it needs to generate novel Cypher to query the database. Notice that it first retrieves the graph schema before generating a query.

Summary

This repo provides a simple ReAct agent implemented primarily with LangGraph and the Neo4j Cypher MCP Server. It demonstrates how to combine PyPI-hosted MCP server tools and local tools in an agent with an easy-to-use chat interface. The repo was built to be easily modifiable and can serve as a template for other agent implementations.

To modify this repo for other Neo4j databases:

- Modify the .env file with your Neo4j connection credentials

- Remove the find_movie_recommendations tool

- Modify the Movie Expert section of the system prompt
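When pointing the agent at a different database, it can help to confirm that the connection variables read by the code are actually set before starting the MCP server. The sketch below assumes the four variable names used in this article; `missing_env_vars` is a hypothetical helper and the URI is a placeholder.

```python
import os

# Variable names read by the article's code via os.getenv
REQUIRED_VARS = ("NEO4J_URI", "NEO4J_USERNAME", "NEO4J_PASSWORD", "NEO4J_DATABASE")


def missing_env_vars(env=None) -> list[str]:
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Placeholder values for illustration only
example_env = {"NEO4J_URI": "neo4j://localhost:7687", "NEO4J_USERNAME": "neo4j"}
missing = missing_env_vars(example_env)
print(missing)
```

Calling `missing_env_vars()` with no argument checks the real process environment, which is what you would do after load_dotenv() has run.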

To modify this repo for other non-Neo4j implementations:

- Modify the .env file

- Replace the Neo4j Cypher MCP Server configuration and tools

- Remove the find_movie_recommendations tool

- Modify the system prompt


Resources

- Model Context Protocol (MCP) Integrations for the Neo4j Graph Database

- What Is a Graph Database?

- Get Started with Neo4j’s Cypher MCP Server

- Explore the Neo4j Data Modeling MCP Server

- Neo4j Cypher Query Language

Quickly Build a ReAct Agent With LangGraph and MCP was originally published in Neo4j Developer Blog on Medium.