Streamline Data Ingestion With the Neo4j Aura Import API

Product Manager for Neo4j Developer Tools, Neo4j



We’re pleased to introduce you to the new Aura Import API, bringing you programmatic access to the Neo4j Aura Import Service, simplifying the process of bringing your data into Neo4j Aura, and allowing you to focus on building amazing graph applications.

Recap on the Aura Import Service

The Aura Import Service, available within Neo4j Aura, offers broad support for various data sources. Whether your data resides in traditional RDBMSes like PostgreSQL, MySQL, SQL Server, and Oracle; cloud data warehouses such as Snowflake, Databricks, Azure Synapse, and Amazon Redshift; or cloud storage from the big three providers (AWS S3, Microsoft Azure Blob Storage, Azure Data Lake, and Google Cloud Storage), the Import Service has you covered.

It allows you to:

- Configure data sources: Easily connect to your chosen data origins

- Browse table metadata: Understand the structure of your source data

- Build graph models and mappings: Visually define how your source data translates into a graph

- Leverage GenAI: Get a head start on graph model creation with AI-powered assistance

Introducing the Aura Import API

The new Aura Import API expands on the capabilities of the Import Service, providing programmatic access to trigger and manage your import jobs. To use the API, you’ll need to have a data source and graph model/mapping configured via the Import Service UI. Once you’re happy with your model and mapping, you can grab its ID and use the API to initiate import jobs programmatically.

The Import Job API is part of the new v2beta1 API. The v1 API remains available for the broader range of instance management operations, which have yet to be migrated.

A key advantage of the import jobs is their idempotent nature. This means that running the same job twice on the same data will yield identical results. This characteristic is useful for incremental data loads, as new records will be matched against your existing graph and updated or linked appropriately, without creating duplicates.
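To make the idempotency point concrete, here is a toy illustration in Python. It is not how the Import Service is implemented (the post doesn’t describe the internals); it only shows the general "match on a key, then update or create" behavior that makes a repeated load safe. The `merge_rows` helper and the sample rows are hypothetical.

```python
from typing import Dict, Iterable, Tuple

# A toy "graph": node key -> node properties.
Graph = Dict[str, Dict[str, str]]


def merge_rows(graph: Graph, rows: Iterable[Tuple[str, Dict[str, str]]]) -> Graph:
    """Upsert each row by key: update the node if it exists, create it otherwise."""
    for key, props in rows:
        graph.setdefault(key, {}).update(props)
    return graph


# Hypothetical source rows, keyed by a unique identifier.
rows = [
    ("job-1", {"state": "Completed"}),
    ("job-2", {"state": "Running"}),
]

graph: Graph = {}
merge_rows(graph, rows)
first_pass = {key: dict(props) for key, props in graph.items()}

# Loading the same rows a second time changes nothing and creates no duplicates.
merge_rows(graph, rows)
print(graph == first_pass)  # True
```

Because a second pass over the same rows leaves the result unchanged, overlapping or repeated loads are harmless.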

An Example With Google Cloud Workflows

We’ll briefly walk through an example of using the API via Google Cloud Workflows, a fully managed orchestration service from Google Cloud.

Setting Up Your Import Model

Before starting, make a note of your Aura Organization ID and Project ID, both of which are shown in the Aura console. You’ll need them to call the Import API.

1. Navigate to the Import app: Start by accessing the Import app within Neo4j Aura.

2. Create a new data source: In this example, we’ll connect to Google Cloud BigQuery using a service account JSON key with the necessary permissions to read and run queries on the dataset.

3. Create a graph model: Once connected, you can immediately begin creating your graph model. Feel free to use the AI assistance to jumpstart the process and refine your model as needed.

In this somewhat meta example, I’m building a graph of the metadata associated with the Neo4j Import Service itself, illustrating how import jobs run, including the data sources, models, and databases involved.

Once your import job is defined and you’ve tested it to ensure that it runs as expected and produces the correct results, you’re ready to start running it via the API. Make a note of the model ID from the URL of your model page (e.g., https://console-preview.neo4j.io/tools/import/models/<import model id>), as this will be crucial for the job API.

For this specific job, I created a view of my underlying BigQuery datasets that contained the last 36 hours of entries, enabling me to call the API daily with some margin for timing.
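The margin that a 36-hour view gives a daily schedule can be worked out with a few lines of Python. This is only a sketch of the arithmetic: the run times are arbitrary example values, and `window_for` is a hypothetical helper, not part of any Neo4j or Google Cloud API.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

WINDOW = timedelta(hours=36)    # span of entries exposed by the view
INTERVAL = timedelta(hours=24)  # the job is triggered once a day


def window_for(run_time: datetime) -> Tuple[datetime, datetime]:
    """Return the (start, end) of the data visible to a run at run_time."""
    return run_time - WINDOW, run_time


# Arbitrary example run times, one day apart.
first_run = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
second_run = first_run + INTERVAL

first_start, first_end = window_for(first_run)
second_start, second_end = window_for(second_run)

# Consecutive runs see 12 hours of the same entries.
overlap = first_end - second_start
print(overlap)  # 12:00:00

# A run can start up to this much late without leaving a gap in coverage.
max_delay = WINDOW - INTERVAL
print(max_delay)  # 12:00:00
```

The overlapping entries are simply imported twice, which is safe because import jobs are idempotent.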

API Endpoints

The Import Service currently provides three API endpoints:

- [POST] import/jobs to run an import job

- [GET] import/jobs/{{AURA_API_IMPORT_JOB_ID}} to get the import job status with an option to list progress details

- [POST] import/jobs/{{AURA_API_IMPORT_JOB_ID}}/cancellation to cancel a running job

You can get more information on the API specification, as well as details on how to authenticate with the API.
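As a rough sketch, the three endpoint URLs can be assembled in Python as follows. The path layout mirrors the one used in the workflow later in this post; the `https://api.neo4j.io` domain and the placeholder IDs are assumptions for illustration, and `ImportApi` is a hypothetical helper rather than an official client.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImportApi:
    """Builds Import API URLs for one Aura organization and project."""

    api_base: str         # API domain plus version, e.g. ".../v2beta1"
    organization_id: str
    project_id: str

    @property
    def jobs_url(self) -> str:
        """[POST] target for running an import job."""
        return (
            f"{self.api_base}/organizations/{self.organization_id}"
            f"/projects/{self.project_id}/import/jobs"
        )

    def job_url(self, job_id: str) -> str:
        """[GET] target for reading the status of a job."""
        return f"{self.jobs_url}/{job_id}"

    def cancellation_url(self, job_id: str) -> str:
        """[POST] target for cancelling a running job."""
        return f"{self.job_url(job_id)}/cancellation"


# Assumed domain and placeholder IDs; substitute your own values.
api = ImportApi(
    api_base="https://api.neo4j.io/v2beta1",
    organization_id="my-org-id",
    project_id="my-project-id",
)

print(api.jobs_url)
print(api.job_url("my-job-id"))
print(api.cancellation_url("my-job-id"))
```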

Google Cloud Workflows Example

In this example, we’ll use Google Cloud Workflows to configure and trigger an import job. While this isn’t a detailed tutorial on Google Cloud Workflows, the following commented YAML for a workflow illustrates basic API usage (also available in a GitHub repository):

    # workflows/aura_import.yaml
    main:
      steps:
        # Retrieve API secret
        - access_string_secret:
            call: googleapis.secretmanager.v1.projects.secrets.versions.accessString
            args:
              project_id: "product-greg-king"
              secret_id: "aura_api_secret"
              version: "latest"
            result: aura_user_secret
        - buildVars:
            assign:
              # OAuth variables
              - oauthUrl: https://api.neo4j.io/oauth/token
              - tokenForm: "grant_type=client_credentials"
              - basicAuth: ${"Basic " + base64.encode(text.encode(aura_user_secret + ":"))}
              # Aura API variables
              - aura_api_domain: ${sys.get_env("AURA_API_DOMAIN")}
              - aura_api_version: ${sys.get_env("AURA_API_VERSION")}
              - apiBase: ${aura_api_domain + "/" + aura_api_version}
              # Details of Aura Organization and project
              - aura_organization_id: ${sys.get_env("AURA_ORGANIZATION_ID")}
              - aura_project_id: ${sys.get_env("AURA_PROJECT_ID")}
              # Import model id for the import job
              - import_model_id: ${sys.get_env("IMPORT_MODEL_ID")}
              # Target Aura Database id for the import job
              - aura_db_id: ${sys.get_env("AURA_DB_ID")}
              # Database user - only required for instances that do not support service account auth (currently Free and VDC tiers)
              # - aura_db_user: ${sys.get_env("AURA_DB_USER")}
              # - aura_db_password: ${sys.get_env("AURA_DB_PASSWORD")} # Good practice to get from secrets manager if required, as done for aura_api_secret above
        # Obtain OAuth token
        - getToken:
            call: http.post
            args:
              url: ${oauthUrl}
              headers:
                Content-Type: application/x-www-form-urlencoded
                Authorization: ${basicAuth}
              body: ${tokenForm}
            result: tokenResp
        # Parse token
        - parseToken:
            assign:
              - auth_header: ${"Bearer " + tokenResp.body.access_token}
              - import_api_url: ${apiBase + "/organizations/" + aura_organization_id + "/projects/" + aura_project_id + "/import/jobs"}
        # Run import job
        - runImport:
            call: http.post
            args:
              url: ${import_api_url}
              headers:
                Authorization: ${auth_header}
                Content-Type: application/json
              body:
                importModelId: ${import_model_id}
                auraCredentials:
                  dbId: ${aura_db_id}
                  # user: ${aura_db_user}
                  # password: ${aura_db_password}
            result: importResp

Note: It is currently required to pass your database username and password for Free and VDC tier instances.

This workflow demonstrates how to obtain the necessary OAuth token from a secret stored in Google Cloud Secret Manager, then create an import job. In my example, I configured this workflow to run daily on a cron schedule.
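For readers who don’t use Google Cloud Workflows, the same two HTTP calls can be sketched with Python’s standard library. The block below only builds the requests and doesn’t send them. It assumes the API credential is a client ID and client secret pair passed via HTTP Basic auth; the example credentials, the `api.neo4j.io/v2beta1` base URL, and the function names are hypothetical, while the body fields and the OAuth URL are taken from the workflow.

```python
import base64
import json
import urllib.request

OAUTH_URL = "https://api.neo4j.io/oauth/token"  # same URL as in the workflow


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build the OAuth client-credentials request (HTTP Basic auth)."""
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    basic = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        OAUTH_URL,
        data=b"grant_type=client_credentials",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Authorization": f"Basic {basic}",
        },
        method="POST",
    )


def build_import_request(
    jobs_url: str, access_token: str, import_model_id: str, db_id: str
) -> urllib.request.Request:
    """Build the request that starts an import job."""
    body = {
        "importModelId": import_model_id,
        "auraCredentials": {"dbId": db_id},  # add user/password where required
    }
    return urllib.request.Request(
        jobs_url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
token_request = build_token_request("example-client-id", "example-client-secret")
import_request = build_import_request(
    "https://api.neo4j.io/v2beta1/organizations/my-org-id/projects/my-project-id/import/jobs",
    access_token="example-token",
    import_model_id="fc371c86-7966-40b5-82b3-93f196d0b928",
    db_id="414d93b4",
)
print(token_request.get_method(), token_request.full_url)
print(import_request.get_method(), import_request.full_url)
```

Each request could then be sent with `urllib.request.urlopen`, reading `access_token` from the JSON body of the token response before building the import request.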

The workflow could also incorporate the status and cancellation endpoints to track progress and implement retries or cancellations as needed.
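A polling loop of that kind might look like the sketch below, written in Python for brevity. How the state is fetched is left to a caller-supplied function, since the JSON shape of the status response isn’t shown in this post. Of the state names used here, only "Completed" appears in the CLI output later in the post; "Running", "Failed", and "Cancelled" are assumed names, and `wait_for_job` is a hypothetical helper.

```python
import time
from typing import Callable, Iterable


def wait_for_job(
    get_state: Callable[[], str],
    terminal_states: Iterable[str] = ("Completed", "Failed", "Cancelled"),  # partly assumed names
    poll_seconds: float = 30.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_state until it returns a terminal state, then return that state.

    Raises TimeoutError after max_polls attempts, at which point the caller
    could decide to call the cancellation endpoint.
    """
    terminal = set(terminal_states)
    for attempt in range(max_polls):
        state = get_state()
        if state in terminal:
            return state
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    raise TimeoutError(f"job still not finished after {max_polls} polls")


# Simulated status responses standing in for calls to the status endpoint.
fake_states = iter(["Running", "Running", "Completed"])
final_state = wait_for_job(lambda: next(fake_states), sleep=lambda seconds: None)
print(final_state)  # Completed
```

Passing `sleep` as a parameter keeps the loop easy to test without waiting in real time.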

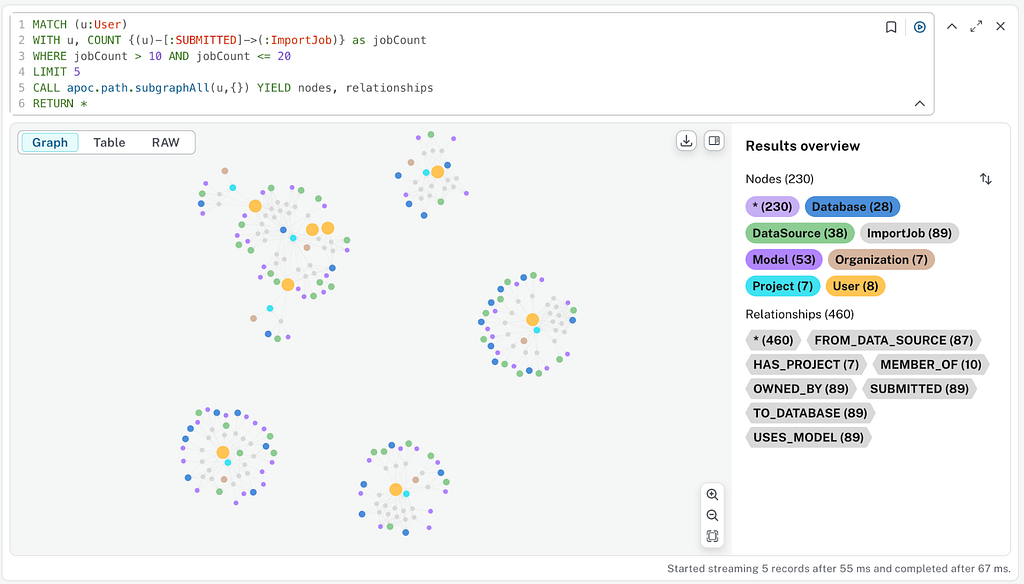

Running this import job daily allows me to gain a rich understanding of how import jobs are being used — in the example below, finding the complete subgraph for five users who’ve run between 10 and 20 import jobs.

Aura CLI for Import Jobs

The Import API functionality is also available directly through the Aura CLI, providing a convenient command-line interface for managing your import jobs.

To enable import features in the Aura CLI, first ensure beta features are enabled by running:

    aura-cli config set beta-enabled true

Triggering an Import Job

    aura-cli import job create \
      --organization-id 64f7d887-2df0-41ae-ac4e-fd34ce55a333 \
      --project-id 64f7d887-2df0-41ae-ac4e-fd34ce55a333 \
      --import-model-id fc371c86-7966-40b5-82b3-93f196d0b928 \
      --db-id 414d93b4
    ID
    667a9266-07bc-48a0-ae5f-3d1e8e73fac4

Reviewing Progress of an Import Job

You can review the progress of an import job, optionally providing the --progress flag for more detailed output:

    $ aura-cli import job get 667a9266-07bc-48a0-ae5f-3d1e8e73fac4 \
      --organization-id 64f7d887-2df0-41ae-ac4e-fd34ce55a333 \
      --project-id 64f7d887-2df0-41ae-ac4e-fd34ce55a333 \
      --progress

    ┌──────────────────────────────────────┬─────────────┬────────────┬────────────────────────┬──────────────────────────┬──────────────────────────────────┬───────────────────┐
    │ ID                                   │ IMPORT_TYPE │ INFO:STATE │ INFO:EXIT_STATUS:STATE │ INFO:PERCENTAGE_COMPLETE │ DATA_SOURCE:NAME                 │ AURA_TARGET:DB_ID │
    ├──────────────────────────────────────┼─────────────┼────────────┼────────────────────────┼──────────────────────────┼──────────────────────────────────┼───────────────────┤
    │ 667a9266-07bc-48a0-ae5f-3d1e8e73fac4 │ cloud       │ Completed  │ Success                │ 100                      │ neo4j-padw-dev.adhoc_scratchpads │ 414d93b4          │
    └──────────────────────────────────────┴─────────────┴────────────┴────────────────────────┴──────────────────────────┴──────────────────────────────────┴───────────────────┘

    ┌──────────────────────────┐
    │ INFO:EXIT_STATUS:MESSAGE │
    ├──────────────────────────┤
    │ Success                  │
    └──────────────────────────┘

    # Progress details:
    # Nodes progress:
    ┌─────┬──────────────────┬────────────────┬────────────┬───────────────┬─────────────────────┬─────────────────┐
    │ ID  │ LABELS           │ PROCESSED_ROWS │ TOTAL_ROWS │ CREATED_NODES │ CREATED_CONSTRAINTS │ CREATED_INDEXES │
    ├─────┼──────────────────┼────────────────┼────────────┼───────────────┼─────────────────────┼─────────────────┤
    │ n:1 │ [                │ 2335           │ 2335       │ 0             │ 0                   │ 0               │
    │     │   "ImportJob"    │                │            │               │                     │                 │
    │     │ ]                │                │            │               │                     │                 │
    │ n:3 │ [                │ 2335           │ 2335       │ 0             │ 0                   │ 0               │
    │     │   "DataSource"   │                │            │               │                     │                 │
    │     │ ]                │                │            │               │                     │                 │
    │ n:2 │ [                │ 2322           │ 2322       │ 0             │ 0                   │ 0               │
    │     │   "Model"        │                │            │               │                     │                 │
    │     │ ]                │                │            │               │                     │                 │
    ---truncated---

Summary

The new Aura Import API brings you programmatic access to the Neo4j Aura Import Service, simplifying the process of bringing your data into Neo4j Aura, and allowing you to focus on building amazing graph applications.

We’ve shown you how to get started quickly, and we hope you enjoy using the Aura Import API to start automating your import workflows. Be sure to leave any feedback you may have.

Streamline Data Ingestion With the Neo4j Aura Import API was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.