Using a Graph Database for Deep Learning Text Classification

This post was written by Kenny Bastani, Developer Evangelist at Neo Technology. Kenny’s original article and past work can be found on his blog.

The feature hierarchy is generated probabilistically as a result of a statistical analysis of neighboring words to a feature. By doing this it becomes possible to recognize a large set of features in test data by eliminating possibilities at each layer.

The lowest-level representation of a feature is closest to the root pattern. In the case of Graphify, the root pattern is a space character. As training increases the number of examples that match the space character, deeper levels of representation are generated by choosing the features with the highest probability of being matched to the left or right of a feature. This kind of deep learning doesn’t require a neural network because of the nature of Neo4j’s property graph data model. The graph provides a way to generate a vector space model of extracted features and to relate text to classes by means of the cosine similarity of their feature vectors, where each class is mapped to a subset of feature nodes within the hierarchy.
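
To make the idea of growing a feature by its most probable neighbor concrete, here is a minimal Python sketch. It is not Graphify's implementation: the function name `best_extension`, the whitespace tokenization, and the use of raw frequency as the probability estimate are all assumptions made for illustration.

```python
from collections import Counter


def best_extension(texts, feature):
    """Return the most frequent one-word extension of `feature` (to the left
    or right) and the share of matches in which that extension occurred.

    Hypothetical helper for illustration only; not part of Graphify.
    """
    feature_words = feature.lower().split()
    n = len(feature_words)
    extensions = Counter()
    matches = 0
    for text in texts:
        words = text.lower().split()
        for i in range(len(words) - n + 1):
            if words[i:i + n] != feature_words:
                continue
            matches += 1
            if i > 0:
                # Neighboring word on the left of the matched feature.
                extensions[" ".join([words[i - 1]] + feature_words)] += 1
            if i + n < len(words):
                # Neighboring word on the right of the matched feature.
                extensions[" ".join(feature_words + [words[i + n]])] += 1
    if not extensions:
        return None, 0.0
    extended, count = extensions.most_common(1)[0]
    return extended, count / matches
```

Calling `best_extension` repeatedly on its own output would produce progressively longer phrases, which loosely mirrors how deeper levels of the hierarchy appear as more training text arrives.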

An advantage of using Neo4j for this is that you can attach classes to the features that matched a text, with those classes being the labels supplied during training.

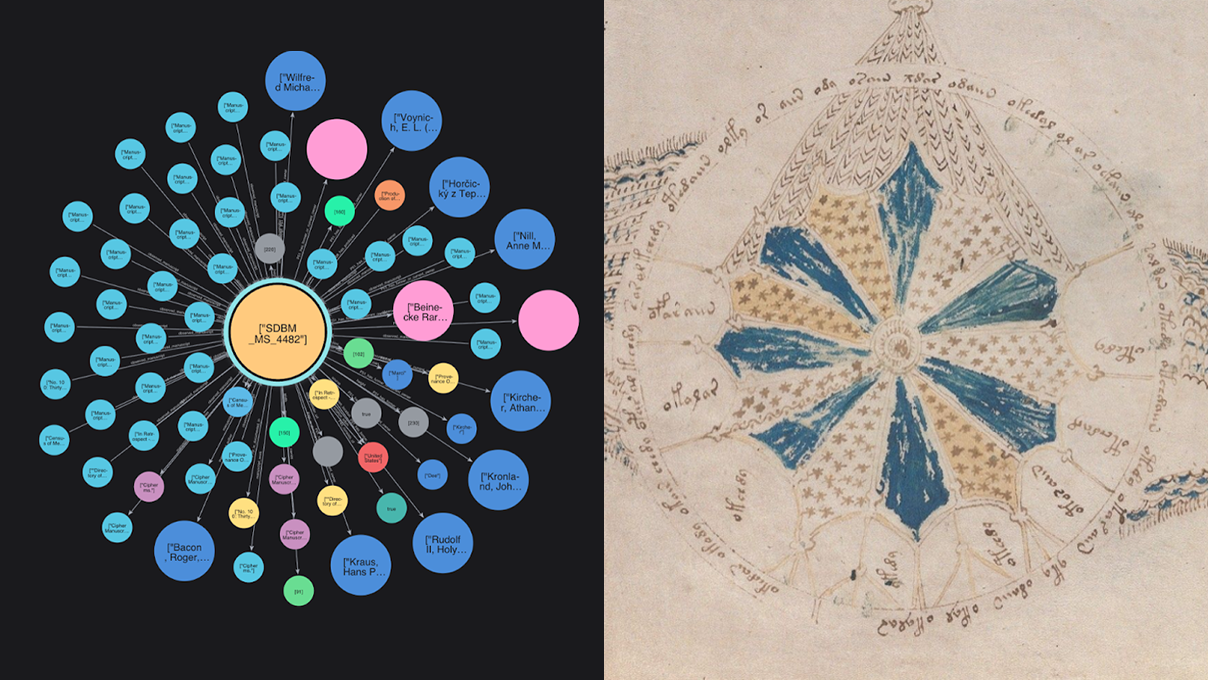

Using a 3D visualization tool called UbiGraph, a visualization of the feature hierarchy shows how deep feature representations grow over time.

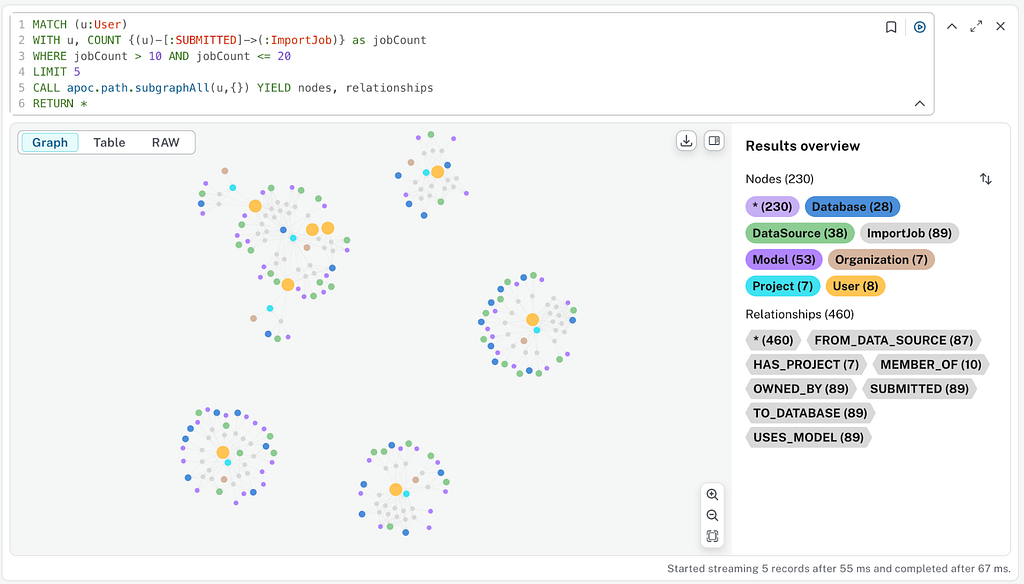

Vector Space Model

Graphify generates a Vector Space Model when classifying text on training data. There are two endpoints that provide classification and similarity features.
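
For readers unfamiliar with the measure, the following Python sketch shows how cosine similarity can rank classes against a text's feature vector. The vectors, the function names, and the dictionary layout are assumptions for illustration; the extension computes its own vectors inside Neo4j.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # An all-zero vector shares no features with anything.
    return dot / (norm_a * norm_b)


def rank_classes(text_vector, class_vectors):
    """Score every class against the text and sort from most to least similar.

    `class_vectors` maps a class name to its feature vector (hypothetical layout).
    """
    scored = [
        {"class": name, "similarity": cosine_similarity(text_vector, vector)}
        for name, vector in class_vectors.items()
    ]
    return sorted(scored, key=lambda match: match["similarity"], reverse=True)
```

The output of `rank_classes` deliberately uses the same `class` and `similarity` keys as the JSON responses shown in this post, so the two can be compared side by side.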

Classify unlabeled text

The first endpoint is https://localhost:7474/service/graphify/classify, which supports the HTTP POST method. When you post the following JSON model, the text property is compared against the feature vectors of all previously trained classes, and the classes are sorted by the cosine similarity between these vectors.

    {
      "text": "Interoperability is the ability of making systems work together."
    }

The result returned from Neo4j is a list of matches sorted by the cosine similarity of the feature vectors for each class in the database.

    {
      "classes": [
        { "class": "Interoperability", "similarity": 0.01478629324290398 },
        { "class": "Natural language", "similarity": 0.014352533094325508 },
        { "class": "Artificial intelligence", "similarity": 0.008389954131481638 },
        { "class": "Graph database", "similarity": 0.006780234851792194 },
        { "class": "Inference engine", "similarity": 0.005775135975571818 },
        { "class": "Neo4j", "similarity": 0.005011493979094744 },
        { "class": "Expert system", "similarity": 0.0045493507614881076 },
        { "class": "Knowledge representation and reasoning", "similarity": 0.0035488311479422202 },
        { "class": "Speech recognition", "similarity": 0.0035459146405026746 },
        { "class": "Knowledge acquisition", "similarity": 0.0033585907499658666 },
        { "class": "Memory", "similarity": 0.003286652624915932 },
        { "class": "Cognitive robotics", "similarity": 0.0026605991849062826 },
        { "class": "Hierarchical control system", "similarity": 0.0024852750266223995 },
        { "class": "NoSQL", "similarity": 0.002359964627061625 },
        { "class": "Hierarchical database model", "similarity": 0.0016629332691377717 },
        { "class": "Never-Ending Language Learning", "similarity": 0.0014433749914281816 },
        { "class": "Multilayer perceptron", "similarity": 0.0014070718231579983 },
        { "class": "Sentence (linguistics)", "similarity": 0.0012682029230640021 },
        { "class": "Argument", "similarity": 0.0012446298877431268 },
        { "class": "Deep learning", "similarity": 0.0011171501184315629 },
        { "class": "Inductive reasoning", "similarity": 0.0010671296082781958 },
        { "class": "Machine translation", "similarity": 0.0010150803638098256 },
        { "class": "Automatic Language Translator", "similarity": 0.001008811074376599 },
        { "class": "Relational database", "similarity": 0.0009875922800915275 },
        { "class": "Storage (memory)", "similarity": 0.000980910572273953 },
        { "class": "Clause", "similarity": 0.0009355842513276578 },
        { "class": "Dependency grammar", "similarity": 0.0006764745128168179 },
        { "class": "Autoencoder", "similarity": 0.0005224831369792641 },
        { "class": "Phrase", "similarity": 0.00029583989661492754 }
      ]
    }
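
As a rough illustration of calling this endpoint from a client, the Python sketch below builds the POST request with the standard library and extracts the best matches from a response body. The helper names `build_classify_request` and `top_classes` are hypothetical, and the `Content-Type` header is an assumption about what the extension expects.

```python
import json
import urllib.request

CLASSIFY_URL = "https://localhost:7474/service/graphify/classify"


def build_classify_request(text, url=CLASSIFY_URL):
    """Build (but do not send) a POST request carrying the text to classify."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        # Assumed header; the post does not state which content type is required.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def top_classes(response_body, limit=3):
    """Return the names of the `limit` most similar classes from a response."""
    classes = json.loads(response_body)["classes"]
    ranked = sorted(classes, key=lambda c: c["similarity"], reverse=True)
    return [c["class"] for c in ranked[:limit]]
```

With a running Neo4j server that has the extension installed, the request could be sent with `urllib.request.urlopen(build_classify_request(...))` and the bytes from `.read()` passed to `top_classes`. For the response shown above, the top three names would be Interoperability, Natural language, and Artificial intelligence.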

Get similar classes

To get the classes most related to a given class, where classes are the labels provided during training, use the endpoint https://localhost:7474/service/graphify/similar/{class}. Again, this uses a vector space model generated from the hierarchy of features mined in the pattern recognition tree. The result is a list of classes sorted by the cosine similarity of the feature vectors associated with each class. For example, issuing an HTTP GET request to https://localhost:7474/service/graphify/similar/NoSQL returns the following results:

    {
      "classes": [
        { "class": "Graph database", "similarity": 0.09574535643836013 },
        { "class": "Relational database", "similarity": 0.07991318266439677 },
        { "class": "Machine translation", "similarity": 0.07693041732140395 },
        { "class": "Deep learning", "similarity": 0.07027180553561777 },
        { "class": "Speech recognition", "similarity": 0.06491846260229797 },
        { "class": "Knowledge representation and reasoning", "similarity": 0.061825794099321346 },
        { "class": "Artificial intelligence", "similarity": 0.059426927894936345 },
        { "class": "Multilayer perceptron", "similarity": 0.056943365042175544 },
        { "class": "Hierarchical database model", "similarity": 0.05617955585333319 },
        { "class": "Interoperability", "similarity": 0.05541367925131132 },
        { "class": "Memory", "similarity": 0.05514558364443694 },
        { "class": "Expert system", "similarity": 0.04869202636766413 },
        { "class": "Inductive reasoning", "similarity": 0.04542968846354395 },
        { "class": "Argument", "similarity": 0.04473621436021445 },
        { "class": "Clause", "similarity": 0.03686385050753761 },
        { "class": "Dependency grammar", "similarity": 0.035584209032388084 },
        { "class": "Sentence (linguistics)", "similarity": 0.03329025076397098 },
        { "class": "Inference engine", "similarity": 0.031225512897898145 },
        { "class": "Neo4j", "similarity": 0.03101280823703653 },
        { "class": "Storage (memory)", "similarity": 0.02979918393661567 },
        { "class": "Hierarchical control system", "similarity": 0.028800749676585427 },
        { "class": "Autoencoder", "similarity": 0.02527201414259688 },
        { "class": "Cognitive robotics", "similarity": 0.023697018076748396 },
        { "class": "Never-Ending Language Learning", "similarity": 0.021246276238820964 },
        { "class": "Phrase", "similarity": 0.019941608021991825 },
        { "class": "Natural language", "similarity": 0.019809613865907624 },
        { "class": "Automatic Language Translator", "similarity": 0.017520049172816868 },
        { "class": "Knowledge acquisition", "similarity": 0.01264614704679436 }
      ]
    }
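
Several class names in these results contain spaces or parentheses, which cannot be placed in a URL path as-is. The Python sketch below shows one way to build the request URL. It assumes the extension accepts standard percent-encoding in the {class} path segment, which the post does not confirm, and the helper name `similar_url` is hypothetical.

```python
from urllib.parse import quote

SIMILAR_URL = "https://localhost:7474/service/graphify/similar/"


def similar_url(class_name, base=SIMILAR_URL):
    """Build the GET URL for a class, percent-encoding unsafe characters.

    safe="" also encodes "/" so a class name can never add a path segment.
    """
    return base + quote(class_name, safe="")
```

For example, `similar_url("NoSQL")` leaves the name unchanged, while `similar_url("Graph database")` encodes the space as `%20`.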

Training

The training endpoint is located at https://localhost:7474/service/graphify/training. Train the model by issuing an HTTP POST request to this endpoint with the following model:

    {
      "text": [
        "Interoperability is the ability of making systems and organizations work together."
      ],
      "label": [
        "Interoperability"
      ]
    }

Features are learned through repetition. The more text containing similar phrases (n-grams), the more likely those features will be extracted and associated with any classes contained in prior training data.
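
Because features are learned through repetition, a client will typically submit many labeled texts. The Python sketch below groups (text, label) pairs into one training request per label, using the model shown above. The grouping strategy, the helper name `build_training_requests`, and the `Content-Type` header are assumptions; the post does not say how multiple texts and labels in a single request are combined, so the sketch sends exactly one label per request.

```python
import json
import urllib.request
from collections import defaultdict

TRAINING_URL = "https://localhost:7474/service/graphify/training"


def build_training_requests(examples, url=TRAINING_URL):
    """Group (text, label) pairs by label and build one POST request per label.

    Requests are only constructed here, not sent.
    """
    texts_by_label = defaultdict(list)
    for text, label in examples:
        texts_by_label[label].append(text)

    requests = []
    for label, texts in texts_by_label.items():
        body = json.dumps({"text": texts, "label": [label]}).encode("utf-8")
        requests.append(
            urllib.request.Request(
                url,
                data=body,
                # Assumed header; not specified in the post.
                headers={"Content-Type": "application/json"},
                method="POST",
            )
        )
    return requests
```

Each returned request could then be sent with `urllib.request.urlopen` against a server that has the extension installed.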

Links

If you’re interested in being a pioneer and testing out this unmanaged extension, head on over to the GitHub project page and follow the installation instructions.

https://github.com/kbastani/graphify

To better provide examples of how to implement this extension for your use cases, I will soon be authoring a sample project that creates a vector space model from a collection of Wikipedia documents.

If you’re interested in helping contribute, please tweet me at @kennybastani.