The Story behind Russian Twitter Trolls: How They Got Away with Looking Human – and How to Catch Them in the Future

Developer Relations Engineer

It’s no secret that Russian operatives used Twitter and other social media platforms in an attempt to influence the 2016 U.S. presidential election with fake news. The question most people aren’t asking is: How did they do it?

More importantly: How can governments and social media vendors detect this sort of behavior in the future before it has unforeseen consequences?

The Backstory of the Russian Troll Network

First, some background. During the 2016 U.S. presidential election, Twitter was used to propagate fake news, presumably to influence the outcome. The House Intelligence Committee then released a list of 2,752 false Twitter accounts believed to be operated by a known Russian troll factory, the Internet Research Agency. Twitter immediately suspended these accounts, removing their information and tweets from public view.

Even though these accounts and tweets were removed from Twitter.com and the Twitter API, journalists at NBC News were able to assemble a subset of the tweets and, using Neo4j, analyze the data to help make sense of what these Russian accounts were doing.

NBC News recently open sourced the data in the hope that others could learn from the dataset, and that the release would inspire those who have been caching tweets to contribute to a combined database.

Two days later, the Mueller indictment specifically named the Internet Research Agency and several of the Twitter accounts and hashtags that appear in the NBC News data. While most news articles explain what happened, the more important question is how it happened, because the answer is what makes it possible to prevent future abuse.

How Did the Interference Work?

The interference worked in several stages, including building up Twitter accounts that used common hashtags and posting reply tweets to popular accounts to gain visibility and followers.

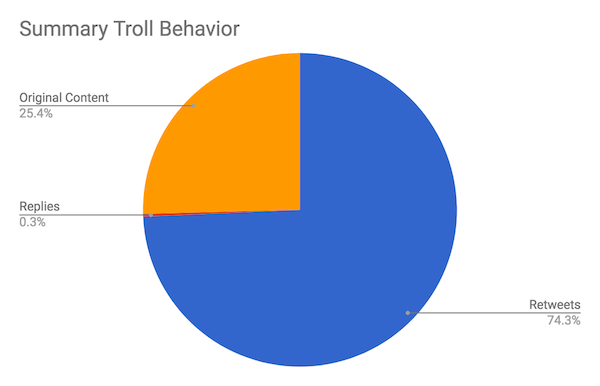

The chart shows that the vast majority of the tweets were retweets; only roughly 25% were original content.

When we break this analysis down on a per-account level, we see that many accounts were only retweeting others, amplifying the messages but not posting much themselves.
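The two views described above, the overall retweet share and the per-account breakdown, can be sketched in a few lines of Python. This is only an illustration: the `(account, is_retweet)` record shape, the account names, and the helper names are assumptions made for the example, not the schema of the NBC News dataset or the method used in the original analysis.

```python
from collections import Counter

# Hypothetical toy records: (account, is_retweet).
# These are invented values, not rows from the NBC News dataset.
tweets = [
    ("amplifier_a", True),
    ("amplifier_a", True),
    ("amplifier_a", True),
    ("amplifier_b", True),
    ("amplifier_b", True),
    ("creator_c", False),
    ("creator_c", False),
    ("creator_c", True),
]


def retweet_share(records):
    """Return the fraction of all records that are retweets."""
    records = list(records)
    if not records:
        return 0.0
    return sum(1 for _, is_rt in records if is_rt) / len(records)


def per_account_share(records):
    """Map each account to the fraction of its own tweets that are retweets."""
    totals = Counter()
    retweets = Counter()
    for account, is_rt in records:
        totals[account] += 1
        if is_rt:
            retweets[account] += 1
    return {account: retweets[account] / totals[account] for account in totals}


overall = retweet_share(tweets)
by_account = per_account_share(tweets)

# Accounts that never posted original content in this sample.
pure_amplifiers = sorted(a for a, share in by_account.items() if share == 1.0)
```

In this toy sample, `pure_amplifiers` contains the two accounts that only retweet, which is the per-account pattern the paragraph above describes.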

These accounts can be classified into roughly three categories:

1.) The Typical American Citizen

This screenshot is the profile image of user @LeroyLovesUSA, one of the accounts Twitter has identified as being operated by the Internet Research Agency in Russia. Many accounts were intended to appear as normal everyday Americans just like this one.

These accounts often tried to associate themselves with real-world events and – now that the Mueller indictment has revealed many of these operatives traveled to the U.S. – it is possible they actually participated in these events. In the image above, @LeroyLovesUSA is taking credit for posting a controversial banner from a bridge in Washington, DC.

2.) The Local Media Outlet

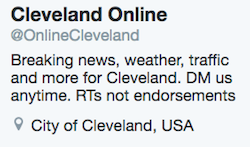

Another type of Russian troll account presented itself as a local news outlet. Here @OnlineCleveland – another of the IRA-controlled accounts – appears to be a local news outlet in Cleveland. These accounts often posted exaggerated reports of violence.

Other examples of accounts in this category include @KansasDailyNews and @WorldnewsPoli.

3.) The Local Political Party

The third type of Russian troll account appeared to be affiliated with local political parties. Here is the account @TEN_GOP, intended to appear as an account connected to the Tennessee Republican party. This account is specifically named in the Mueller indictment.

The Amplification Network

Analyzing the data, we were able to determine that most of the original tweets in the Russian troll network were written by a small number of users, such as @TEN_GOP. As mentioned above, the majority of tweets overall were retweets, because many of the troll accounts did nothing but retweet other accounts in an attempt to amplify the message.

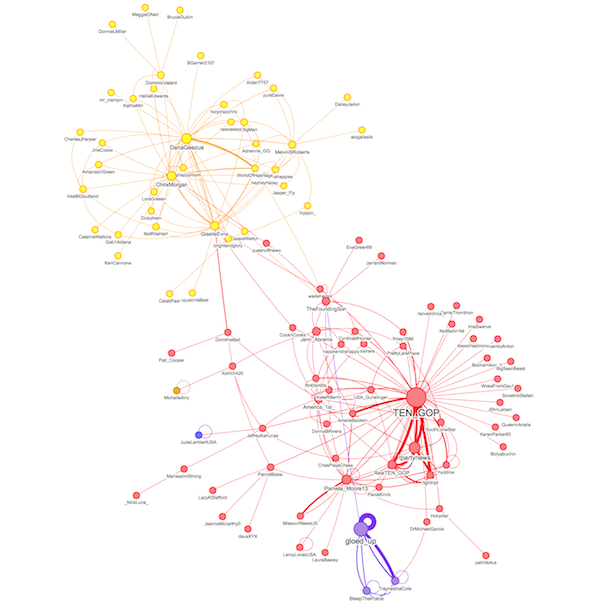

When we apply graph analysis to this retweet network, we can see that the graph partitions into three distinct clusters, or communities. Further, we can run the PageRank centrality algorithm to identify the most influential accounts within each cluster.

A community detection algorithm shows there are three clear communities in the Russian troll retweet network. Node size is proportional to the PageRank score for each node, showing the importance of the account in the network.
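To make the two graph steps concrete, here is a minimal pure-Python sketch run on an invented retweet graph. The article does not say which community detection algorithm was used, so label propagation stands in here as one simple option, and the PageRank function is a plain power iteration rather than Neo4j's implementation. The edge list, the account names, and the parameter defaults are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical retweet edges: (retweeting account, retweeted account).
edges = [
    ("red_1", "creator_red"),
    ("red_2", "creator_red"),
    ("red_3", "creator_red"),
    ("red_3", "red_1"),
    ("blue_1", "creator_blue"),
    ("blue_2", "creator_blue"),
    ("red_3", "creator_blue"),  # a single cross-community retweet
]


def pagerank(edges, damping=0.85, iterations=50):
    """Power-iteration PageRank over directed (source, target) edges."""
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for source, target in edges:
        out_links[source].append(target)
    rank = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        # Rank held by accounts with no outgoing edges is spread evenly.
        dangling = sum(rank[n] for n in nodes if not out_links[n])
        base = (1.0 - damping + damping * dangling) / len(nodes)
        new_rank = {n: base for n in nodes}
        for source in nodes:
            for target in out_links[source]:
                new_rank[target] += damping * rank[source] / len(out_links[source])
        rank = new_rank
    return rank


def label_propagation(edges, max_rounds=20):
    """Deterministic label propagation that ignores edge direction."""
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    labels = {n: n for n in neighbors}
    for _ in range(max_rounds):
        changed = False
        for node in sorted(neighbors):
            counts = Counter(labels[m] for m in neighbors[node])
            best = max(counts.values())
            # Break ties by the smallest label so repeated runs agree.
            choice = min(label for label, c in counts.items() if c == best)
            if choice != labels[node]:
                labels[node] = choice
                changed = True
        if not changed:
            break
    return labels


ranks = pagerank(edges)
labels = label_propagation(edges)

# Group accounts by community label, then pick the top-ranked account in each.
communities = {}
for node, label in labels.items():
    communities.setdefault(label, []).append(node)
top_accounts = sorted(max(members, key=ranks.get) for members in communities.values())
```

Because retweets point from the amplifier to the original author, PageRank accumulates on the accounts that get retweeted, so the content creators surface as the largest nodes in each community.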

When we then look into the hashtags these Russian trolls were using, we can see that the red group was tweeting mainly about right-wing politics (#VoterFraud, #TrumpTrain); the yellow group was more left-leaning, but not necessarily positively (#ObamasWishlist, #RejectedDebateTopics); and the purple group covered topics in the Black Lives Matter community (#BlackLivesMatter, #Racism, #BLM). Each of these three clusters tended to have a small number of original content generators, with the bulk of the community amplifying the message.
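Once each account has a community label, characterizing a cluster by its hashtags is a group-and-count step. The sketch below assumes a hypothetical `community_of` mapping and a list of `(account, hashtags)` pairs; the account names and counts are invented, and only the hashtag strings are borrowed from the text above.

```python
from collections import Counter, defaultdict

# Hypothetical inputs: a community label per account, and the hashtags on each tweet.
community_of = {"acct_1": "red", "acct_2": "red", "acct_3": "purple"}
tweet_hashtags = [
    ("acct_1", ["#VoterFraud", "#TrumpTrain"]),
    ("acct_2", ["#TrumpTrain"]),
    ("acct_3", ["#BlackLivesMatter", "#BLM"]),
    ("acct_3", ["#BlackLivesMatter"]),
]


def top_hashtags(community_of, tweet_hashtags, n=1):
    """Return the n most frequent hashtags (lowercased) for each community."""
    counts = defaultdict(Counter)
    for account, tags in tweet_hashtags:
        counts[community_of[account]].update(tag.lower() for tag in tags)
    return {
        community: [tag for tag, _ in counter.most_common(n)]
        for community, counter in counts.items()
    }


top = top_hashtags(community_of, tweet_hashtags)
```

Hashtags are lowercased before counting because Twitter treats them case-insensitively, so `#BLM` and `#blm` should land in the same bucket.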

For example, one account, @TheFoundingSon, sent more than 3,200 original tweets, averaging about 7 tweets per day. On the other hand, accounts like @AmelieBaldwin authored only 21 original tweets out of more than 9,000 sent.

The Mueller Indictment

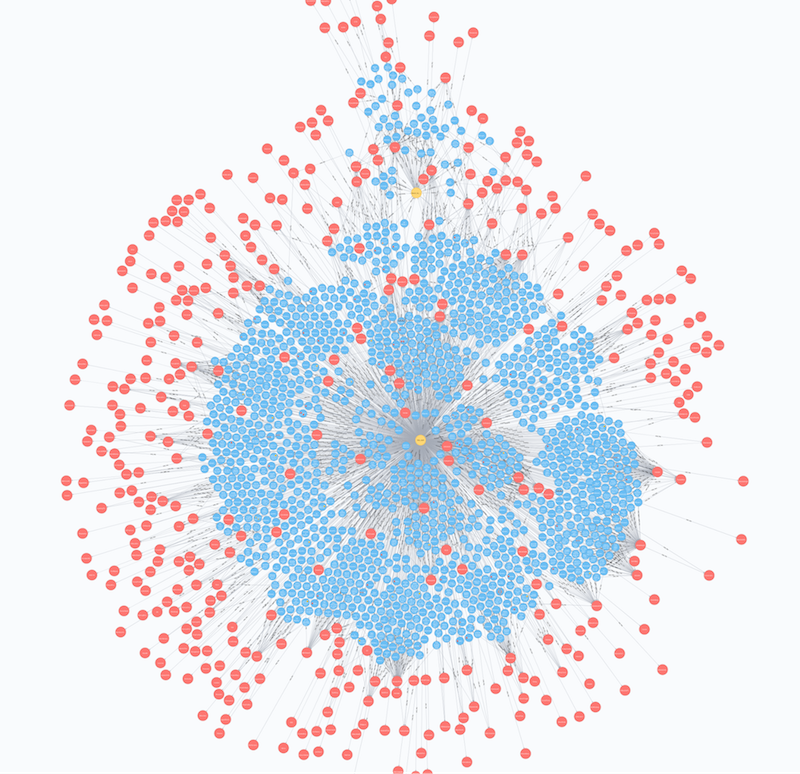

The Mueller indictment specifically names two Twitter accounts: @TEN_GOP and @March_For_Trump. The NBC News dataset captured thousands of tweets from these two users.

This graph shows tweets from the accounts named in the Mueller indictment and the hashtags they used. You can see a small overlap in the hashtags used by the two accounts.
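The overlap visible in a graph like this can also be measured directly with set operations. The following sketch uses invented hashtag lists for two placeholder accounts; it does not reproduce the hashtags actually used by the accounts named in the indictment, and the Jaccard ratio is just one reasonable way to express "small overlap" as a number.

```python
def hashtag_overlap(tags_a, tags_b):
    """Return the shared hashtags and the Jaccard similarity of two hashtag lists."""
    a = {tag.lower() for tag in tags_a}
    b = {tag.lower() for tag in tags_b}
    shared = a & b
    union = a | b
    jaccard = len(shared) / len(union) if union else 0.0
    return shared, jaccard


# Hypothetical hashtag lists for two placeholder accounts.
account_a_tags = ["#tagone", "#tagtwo", "#Shared"]
account_b_tags = ["#shared", "#tagthree"]

shared, jaccard = hashtag_overlap(account_a_tags, account_b_tags)
```

A Jaccard value near 0 means the two accounts mostly used different hashtags; a value near 1 means they used nearly the same set.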

Conclusion

So, what can social media platforms and governments do to monitor and prevent future abuse?

First, it’s a matter of connections. In today’s hyper-connected world, it’s difficult to identify relationships in a dataset if you’re not using a technology purpose-built for storing connected data. It’s even more difficult if you’re not looking for connections in the first place.

Second, once you’re storing and looking for connections within your datasets, it’s essential to detect and understand the patterns of behavior reflected by those connections. In this case, a simple graph algorithm (PageRank) was able to illustrate that most of the Russian troll accounts behaved like single-minded bees with a focused job – and not like normal humans.

Using a connections-first approach to analyzing these sorts of datasets, both governments and social media platforms can more proactively detect and deter this sort of meddling behavior before it has a chance to derail democracy or poison civil conversation.

Explore the tweets for yourself with a graph database of the Russian troll dataset available via the Neo4j Sandbox.