Toward AI Standards: Graph Technology for Responsible AI

Graph Analytics & AI Program Director


Last week, Neo4j CEO Emil Eifrem published the blog post “Towards AI Standards, Why Context Is Critical for Artificial Intelligence,” which discussed our recent response to the request for information (RFI) from the U.S. National Institute of Standards and Technology (NIST).

NIST is looking to create a plan for federal engagement that supports reliable, robust and trustworthy artificial intelligence (AI) technologies through common technical standards.

Our response conveyed that AI must be guided not just by technical standards but also by ethical standards, and that context is the key. From Emil:

“Context – in both data and in life – is derived from connections, and what’s better at natively storing, traversing and analyzing connection than graph technology? Nothing.”

In this four-part series, we’ll break down the case for graph technology as the foundation for responsible AI – a commitment to transparency, trackability, reliability, fairness and situational appropriateness.

This week, we kick off the series by looking at graph technology and how it creates context for responsible AI.

Graph Technology and Creating Context for AI

For artificial intelligence (AI) to be more situationally appropriate and to “learn” in a way that uses adjacent information to understand and refine its outputs, it needs to be underpinned by context. Context is all of the peripheral information relevant to a specific AI application.

AI standards that don’t explicitly address contextual information lead to subpar outcomes, because solution providers leave out this adjacent information. The result is narrower, more rigid AI, uninterpretable predictions and less accountability.

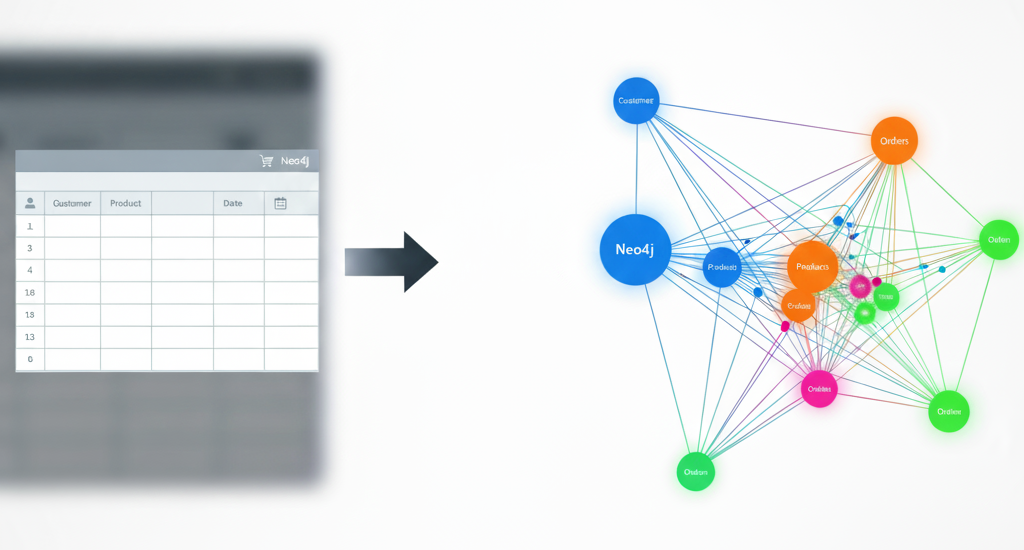

Graph technologies are a state-of-the-art, purpose-built method for adding and leveraging context from data. Proven repeatedly in deployments worldwide, graph technology is a powerful foundation for AI.

This series explains how graph technology provides much-needed context to AI applications. It includes examples of how AI outcomes are more effective, trustworthy and robust when underpinned by the data context that graph platforms deliver.

Artificial Intelligence and Contextual Information

AI today is effective for specific, well-defined tasks but struggles with ambiguity. Humans handle ambiguity by using context to work out what matters in a situation, and then extend that learning to new situations.

For example, when we make travel plans, our decisions vary significantly depending on whether the trip is for work or pleasure. And once we learn a complicated or nuanced task, like driving a manual transmission, we easily apply it to other scenarios, such as driving other vehicles. We are masters of abstraction and of recycling lessons learned.

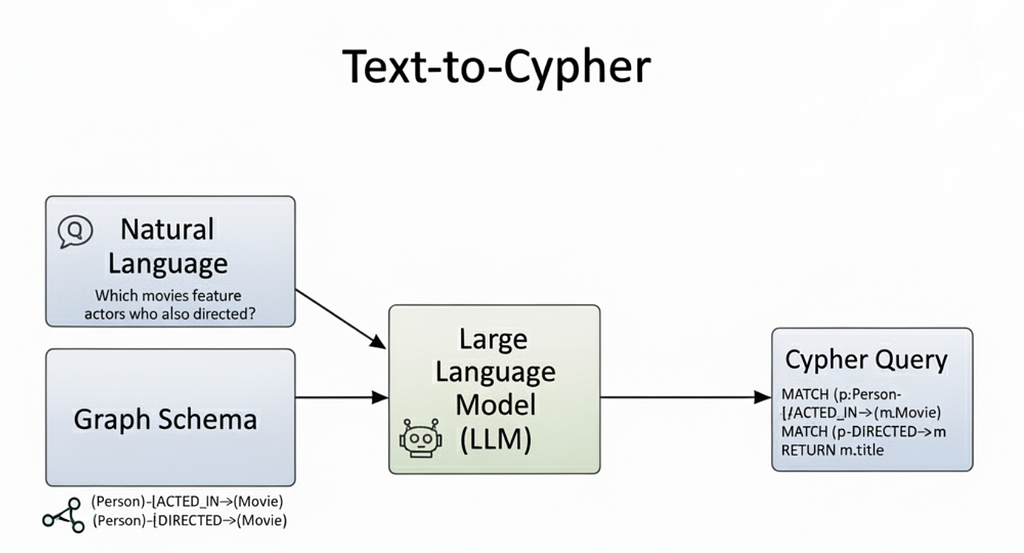

For artificial intelligence to make human-like decisions that are more situationally appropriate, it needs to incorporate context – all of the adjacent information. Context-driven AI also helps ensure the explainability and transparency of any given decision, since human overseers can better map and visualize the decision path.

Without context, AI requires exhaustive training, strictly prescriptive rules and narrowly specific applications. Moreover, since it’s impossible to anticipate every possible situation, AI solutions often fall short when new situations arise. Sometimes the outcomes are even harmful, such as biased recommendations or damaging interpretations.

For example, Microsoft’s Twitter bot, Tay, learned from Twitter users how to respond to tweets. After interacting with real social media users, however, Tay learned offensive language and racial slurs. Another example is Amazon’s AI-powered recruiting tool, which was shut down after showing bias against women candidates (as reported by Reuters).

In both of these cases, the machine learning models were trained on existing data that lacked the appropriate context. Tay’s model drew disproportionately from the loudest and most outrageous opinions, reinforcing them as the norm. Amazon’s recruiting tool amplified and even codified discriminatory practices because its existing dataset was too narrow.

And how do we identify whether an AI-based solution is suboptimal, incorrect or bad? Do we wait until something terrible happens?

Knowing if an AI project has gone off-course requires a larger frame of reference to identify how millions of data points and procedures come together. If we fail to evaluate AI outcomes within a larger context, we risk accelerating unintended outcomes as data and technologies become more complex.

Explainability is also critical for accountability. Particularly in nuanced, high-stakes decisions, such as creditworthiness assessments or criminal sentencing, unchecked AI can put entire groups at a disadvantage.

Conclusion

Context-driven AI helps us understand and explain the factors and reasoning paths behind AI decisions, so organizations can better interpret and account for them.

Next week, we’ll discuss graph technology as a fabric for context and connections that lays the foundation for more sophisticated, interpretable and flexible patterns of reasoning.


Read the white paper, Artificial Intelligence & Graph Technology: Enhancing AI with Context & Connections.