20 Episodes of Going Meta – A Recap on Semantics, Ontologies, and Knowledge Graphs

Senior Developer Marketing Manager



In this live-stream series, Jesús Barrasa and I explored the many aspects of Semantics, Ontologies and Knowledge Graphs. Inadvertently, a few themes emerged, and we thought talking about them would help you find your way in the already sizeable collection of episodes.

Going Meta

Back in February 2022, when Jesús Barrasa and I started with the first episode, we didn’t quite know where we would be going: would it be more hands-on, more conversational…? We knew that the main themes would be Semantics, Ontologies, and Knowledge Graphs and that our target audience would be practitioners. We wanted to make sure that we stayed away from the vagueness and extremely theoretical/academic approach that so often makes this space one of abstract discussion but of little real value. We really wanted people to leave each episode equipped with ideas but also with assets to help them apply the concepts discussed to their problems straight away.

The “why?” behind the choice of themes was twofold. First, we had noticed that more and more knowledge graph projects implemented on Neo4j integrated elements from the semantic stack (RDF, ontologies, inference…). Second, we had Jesús with us, who brings a rare combination of backgrounds in both RDF and Neo4j. He is the mastermind behind neosemantics, which integrates RDF and Linked Data with Neo4j. He also recently co-authored an O’Reilly book on Knowledge Graphs for practitioners.

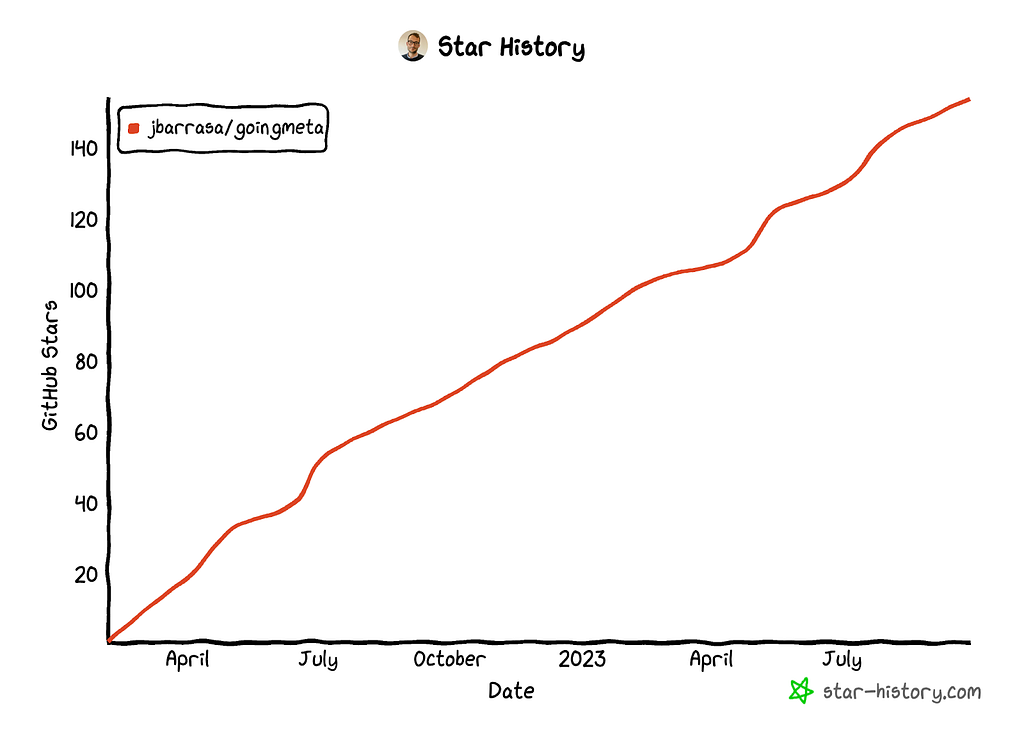

The reception has been amazing: 40k aggregated views on YouTube so far, and loads of stars on GitHub. From the very beginning, we have maintained a GitHub repository where we share all the assets (code, queries, datasets, ontologies, notebooks…) used during each episode.

A Recap – Themes Emerge

For episode 20, we wanted to look back at what we had published over time, organise the content, and put a bit of structure around it. This was an interesting exercise for us too, as we usually decide what we will cover only shortly before the stream. Our core themes would obviously be there, but was there any latent pattern? The answer was yes, and we quickly identified four different tracks.

You can use this blog post as your guide to browse through the content and find what is most relevant to you. Leave us a comment (or just a thumbs up 🙂) if you have any remarks or if a certain topic (new or already covered) is of particular interest to you.

Data Engineering Track

You’re a data engineer, so you care about data pipelines, data quality, data movement (migrations and so on). In this track you will find episodes that deal with the creation of data pipelines driven by semantic definitions of the target schema (ontologies), import/export of graph data to/from Neo4j and RDF sources/sinks. We focussed (a lot) on how you can manage and control the data quality in Neo4j by constraining the shape of your graph.
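To make "constraining the shape of your graph" a little more concrete, here is a minimal sketch in plain Python. It is not SHACL and it does not use neosemantics or Neo4j; the node dictionaries, the `PERSON_SHAPE` rules, and the `validate` helper are all hypothetical names made up for illustration. The idea it shows is only the general one: declare what a node of a given type should look like, then report every node that does not conform.

```python
from typing import Any

# Hypothetical in-memory nodes: a label plus a property map.
nodes = [
    {"label": "Person", "props": {"name": "Ada", "born": 1815}},
    {"label": "Person", "props": {"name": "Bob", "born": "unknown"}},
    {"label": "Person", "props": {"born": 1990}},
]

# Hypothetical shape: required properties and their expected Python types.
PERSON_SHAPE: dict[str, type] = {"name": str, "born": int}


def validate(node: dict[str, Any], label: str, shape: dict[str, type]) -> list[str]:
    """Return a list of human-readable violations for one node."""
    if node["label"] != label:
        return []  # the shape only targets nodes carrying the given label
    violations = []
    for prop, expected in shape.items():
        if prop not in node["props"]:
            violations.append(f"missing property '{prop}'")
        elif not isinstance(node["props"][prop], expected):
            violations.append(f"property '{prop}' is not of type {expected.__name__}")
    return violations


# Map each node's position in the list to its violations (empty list = conforms).
report = {i: validate(n, "Person", PERSON_SHAPE) for i, n in enumerate(nodes)}
```

In a real project the shape would live in a shared, declarative definition rather than in application code, which is the approach the SHACL episode in this track walks through.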

Episodes in this track:

3 – Controlling the shape of your graph with SHACL

5 – Ontology-driven Knowledge Graph construction

9 – Unsupervised KG construction. Graph Observability

11 – Graph data quality with graph expectations

18 – Easy Full-Graph Migrations from Triple Stores to Neo4j

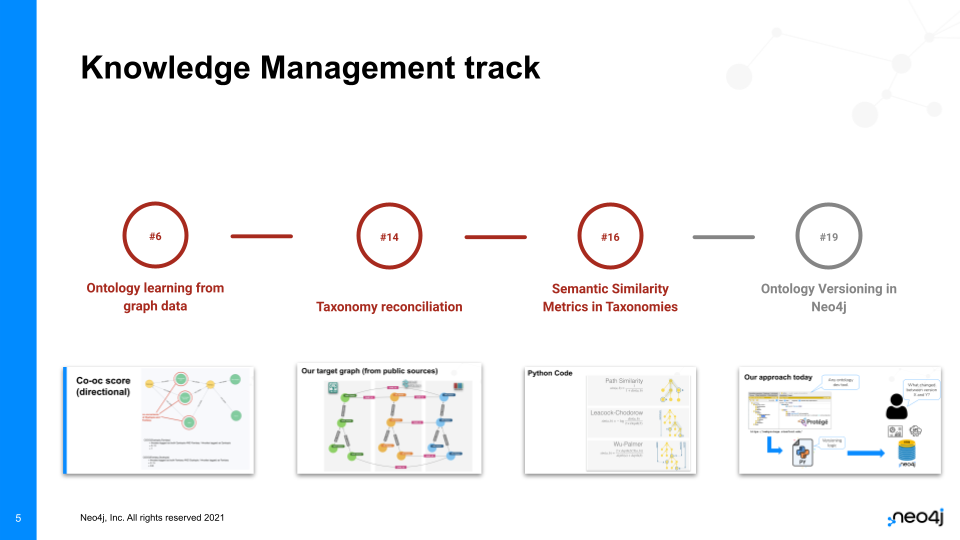

Knowledge Management Track

You’re a knowledge engineer, an ontologist, an information architect… so you care about semantics, ontologies, shared vocabularies… Ontologies give structure to your data, almost like an augmented schema. These ontologies need to be managed, and we show examples of how to do that and how to work with tools like WebProtégé. With Neo4j, you can manage your ontologies, explore them visually, and analyse them structurally to detect inconsistencies or misalignments between them.

Episodes in this track:

6 – Ontology learning from graph data

14 – Taxonomy reconciliation

16 – Semantic Similarity Metrics in Taxonomies

19 – Ontology Versioning in Neo4j

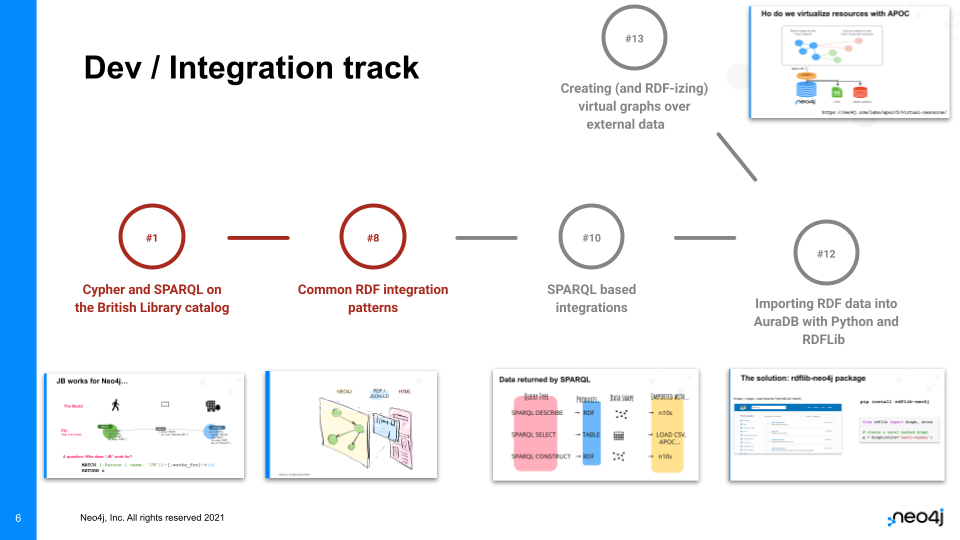

Developer / Data Integration Track

This is the interoperability/integration track. Think of it as the engine room needed for your semantics or knowledge graph project. We show how different tools work together and how best to set up these processes. So it is only logical that this track also covers how to import RDF data into a graph, using SPARQL or other endpoints.

Episodes in this track:

1 – Cypher and SPARQL on the British Library catalog

8 – Common RDF integration patterns

10 – SPARQL based integrations

12 – Importing RDF data into AuraDB with Python and RDFLib

13 – Creating (and RDF-izing) virtual graphs over external data

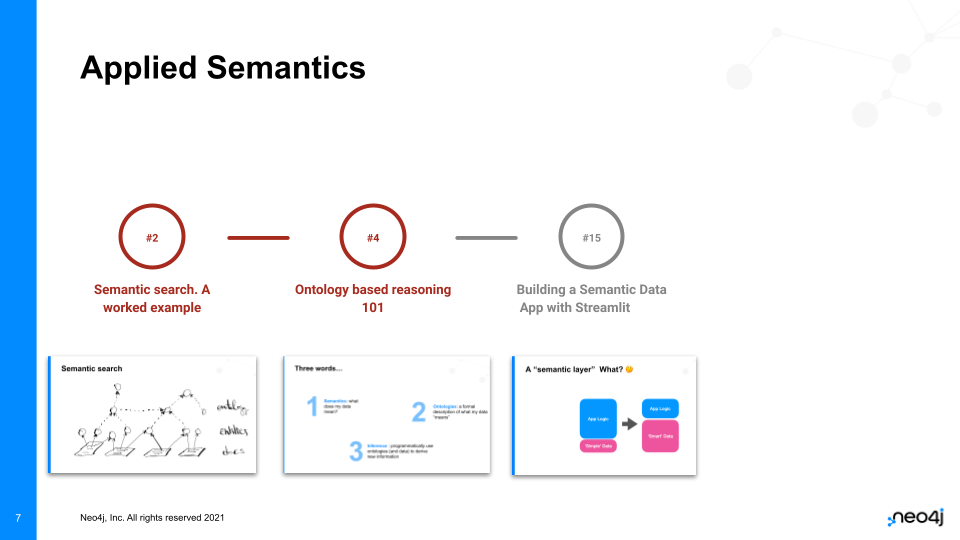

Applied Semantics Track

This could almost be understood as a follow-up to the Knowledge Management Track. You have data and ontologies in your graph — now what? Well, now you can automate inferences based on the data and ontologies to build intelligent applications. We show a few examples of this in this track.
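As a rough illustration of what "automating inferences based on the data and ontologies" can mean, the sketch below derives extra class memberships from a small class hierarchy. It is plain Python rather than Cypher or neosemantics, and every name in it (`subclass_of`, `instance_of`, `ancestors`, `inferred_classes`, `instances_of`, and the vehicle data) is a hypothetical example, not something taken from the episodes.

```python
# Hypothetical ontology: child class -> parent class (a SUBCLASS_OF hierarchy).
subclass_of = {"Sedan": "Car", "Car": "Vehicle", "Truck": "Vehicle"}

# Hypothetical instance data: instance -> the class it is explicitly asserted to have.
instance_of = {"my_car": "Sedan", "big_rig": "Truck"}


def ancestors(cls: str) -> list[str]:
    """Walk the hierarchy upwards and return every superclass of cls."""
    found = []
    while cls in subclass_of:
        cls = subclass_of[cls]
        if cls in found:
            break  # guard against accidental cycles in the hierarchy
        found.append(cls)
    return found


def inferred_classes(instance: str) -> list[str]:
    """The asserted class plus everything implied by the hierarchy."""
    asserted = instance_of[instance]
    return [asserted] + ancestors(asserted)


def instances_of(cls: str) -> list[str]:
    """All instances that belong to cls, whether asserted or inferred."""
    return sorted(i for i in instance_of if cls in inferred_classes(i))
```

With this in place, asking for `instances_of("Vehicle")` returns both `big_rig` and `my_car`, even though neither was ever labelled as a vehicle directly. That is the kind of question an application can answer once the ontology sits next to the data.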

Episodes in this track:

2 – Semantic search. A worked example

4 – Ontology based reasoning 101

15 – Building a Semantic Data App with Streamlit

Interesting Links

Jesús Barrasa: https://twitter.com/barrasadv

Going Meta Episodes: https://development.neo4j.dev/video/going-meta-a-series-on-graphs-semantics-and-knowledge/

Github Repo: https://github.com/jbarrasa/goingmeta

neosemantics: https://development.neo4j.dev/labs/neosemantics/

eBook Building Knowledge Graphs: A Practitioner’s Guide: https://development.neo4j.dev/knowledge-graphs-practitioners-guide/

20 Episodes of Going Meta — A Recap was originally published in Neo4j Developer Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.