Implementing Advanced Retrieval RAG Strategies With Neo4j

Graph ML and GenAI Research, Neo4j

These days, you can deploy retrieval-augmented generation (RAG) applications in just a few minutes. Most RAG applications, like Chat with Your PDF, use basic vector similarity search to retrieve relevant information from the database and feed it to the LLM to generate a final response.

However, basic vector search is not robust enough to handle all use cases. The vector similarity search process only compares the semantic content of items based on the words and concepts they share, without regard for other aspects of the data. A RAG application based on basic vector search will not integrate context from your data structure for advanced reasoning.

In this blog post, we’ll walk you through advanced methods to build a RAG application that draws on data context to answer complex, multi-part questions. You’ll learn how to use the neo4j-advanced-rag template and host it using LangServe.

Neo4j Environment Setup

You need to set up Neo4j 5.11 or later to follow along with the examples in this blog post. The easiest way is to start a free instance on Neo4j Aura, which offers cloud instances of the Neo4j database. Alternatively, you can set up a local instance of the Neo4j database by downloading the Neo4j Desktop application and creating a local database instance.

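from langchain.graphs import Neo4jGraph

# Replace these values with the connection details of your own instance
url = "neo4j+s://databases.neo4j.io"
username = "neo4j"
password = ""

# Open a connection to the Neo4j database
graph = Neo4jGraph(
    url=url,
    username=username,
    password=password
)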

Advanced RAG Strategies

Step-back prompting emerged as a way to tackle the limitations of basic vector search in RAG. It emphasizes taking a step back from the details of a task to focus on the broader conceptual framework.

The step-back prompting technique recognizes that immediately focusing on the details of complex tasks can lead to errors. Instead of plunging straight into the complexities, the model first asks and answers a general question about the fundamental principle or concept behind a query. With this foundational knowledge, the model can more accurately reason out answers.
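To make the flow concrete, here is a minimal sketch of step-back prompting in plain Python. It is not taken from the template: the prompt wording, the `step_back_answer` function, and the `llm` callable (any function that maps a prompt string to a completion string) are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical prompt wording; the technique does not prescribe exact text.
STEP_BACK_TEMPLATE = (
    "Rewrite the question below as a more general question about the "
    "underlying principle or concept.\n"
    "Question: {question}\n"
    "General question:"
)

ANSWER_TEMPLATE = (
    "Background: {background}\n"
    "Use the background to answer the question.\n"
    "Question: {question}\n"
    "Answer:"
)


def step_back_answer(question: str, llm: Callable[[str], str]) -> dict:
    """Answer `question` in three LLM calls: step back, ground, then answer."""
    # 1. Step back: ask the model for a broader version of the question.
    general_question = llm(STEP_BACK_TEMPLATE.format(question=question)).strip()
    # 2. Answer the broader question to obtain the foundational knowledge.
    background = llm(general_question).strip()
    # 3. Answer the original question with that knowledge in the prompt.
    final_prompt = ANSWER_TEMPLATE.format(background=background, question=question)
    return {
        "general_question": general_question,
        "background": background,
        "answer": llm(final_prompt).strip(),
    }
```

In a RAG application, the second step could just as well be a retrieval call that looks up the general question in the database instead of asking the model directly.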

Parent Document Retrievers

Parent document retrievers emerged from the hypothesis that embedding an entire document as a single vector might not be the most effective way to retrieve it.

Large documents can be split into smaller chunks, which are converted to vectors, improving indexing for similarity searches. Although these smaller vectors better represent specific concepts, the original large document is retrieved as it provides better context for answers.

Similarly, you can use an LLM to generate questions the document answers. The document is then indexed by these question embeddings, providing increased similarity to user questions. In both examples, the full parent document is retrieved to provide complete context, hence the name “parent document retriever.”
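The idea can be sketched without any database at all. The example below is a toy, assuming a bag-of-words counter in place of a real embedding model and an in-memory list in place of a vector index; the class and function names are hypothetical. What it shows is the core mechanic: the search runs over child vectors, but the answer context is the full parent.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ParentDocumentRetriever:
    def __init__(self) -> None:
        self.parents: dict[str, str] = {}
        self.index: list[tuple[Counter, str]] = []  # (child vector, parent id)

    def add(self, parent_id: str, parent_text: str, children: list[str]) -> None:
        """Store the parent text and index each child under the parent's id."""
        self.parents[parent_id] = parent_text
        for child in children:
            self.index.append((embed(child), parent_id))

    def retrieve(self, query: str) -> str:
        """Return the full parent of the child that best matches the query."""
        query_vec = embed(query)
        _, parent_id = max(self.index, key=lambda item: cosine(query_vec, item[0]))
        return self.parents[parent_id]
```

The `children` argument does not have to hold chunks of the parent. Passing LLM-generated questions instead gives the question-based variant described above, with no change to the retrieval code.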

Available Strategies

1. Typical RAG

Traditional method where the exact data indexed is the data retrieved.

2. Parent Retriever

Instead of indexing entire documents, data is divided into larger “parent” documents and smaller “child” chunks. Child documents are indexed for better representation of specific concepts, while parent documents are retrieved to ensure context retention.

3. Hypothetical Questions

Documents are processed to generate potential questions they might answer. These questions are then indexed for better representation of specific concepts, while parent documents are retrieved to ensure context retention.

4. Summaries

Instead of indexing the entire document, a summary of the document is created and indexed. As with the previous two strategies, the parent document is what gets retrieved and passed to the LLM.
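One way to see how the four strategies relate is to notice that they differ only in which text gets embedded, while three of them return the same parent text. The sketch below illustrates that under stated assumptions: the strategy names, the `IndexEntry` type, and the `split`, `generate_questions`, and `summarize` callables are hypothetical placeholders, not the template's actual code.

```python
from typing import Callable, NamedTuple


class IndexEntry(NamedTuple):
    indexed_text: str   # text that gets embedded and searched
    returned_text: str  # text handed to the LLM when the entry matches


def build_entries(
    parent: str,
    strategy: str,
    split: Callable[[str], list[str]],
    generate_questions: Callable[[str], list[str]],
    summarize: Callable[[str], str],
) -> list[IndexEntry]:
    """Build the index entries one parent document produces under a strategy."""
    if strategy == "typical_rag":
        # The indexed text and the returned text are the same.
        return [IndexEntry(parent, parent)]
    if strategy == "parent_retriever":
        # Index small chunks, return the parent they came from.
        return [IndexEntry(child, parent) for child in split(parent)]
    if strategy == "hypothetical_questions":
        # Index questions the document could answer, return the document.
        return [IndexEntry(q, parent) for q in generate_questions(parent)]
    if strategy == "summaries":
        # Index one summary, return the document.
        return [IndexEntry(summarize(parent), parent)]
    raise ValueError(f"unknown strategy: {strategy}")
```

In a real application, `generate_questions` and `summarize` would be LLM calls, which is why those two strategies cost more at ingestion time.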

Implementing RAG Strategies With LangChain Templates

To use LangChain templates, you need to first install the LangChain CLI:

pip install -U "langchain-cli[serve]"

Retrieving the LangChain template is then as simple as running the following command:

langchain app new my-app --package neo4j-advanced-rag

This command creates a new folder called my-app and stores all the relevant code in it. Think of it as a “git clone” equivalent for LangChain templates. It constructs the following structure in your filesystem.

Two top-level folders are created:

- app: stores the FastAPI server code.

- packages: stores all the templates that you selected to use in this application. Remember, you can use multiple templates in a single application.

Every template is a standalone project with its own Poetry file, readme, and potentially also an ingest script, which you can use to populate the database. In the neo4j-advanced-rag template, the ingest script constructs a small graph based on the information from the Dune Wikipedia page. Before running it, make sure to set the relevant environment variables:

export OPENAI_API_KEY=sk-..
export NEO4J_USERNAME=neo4j
export NEO4J_PASSWORD=password
export NEO4J_URI=bolt://localhost:7687

Make sure to change the environment variables to appropriate values. Then, you can run the ingest script with the following command.

python ingest.py
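Since the ingest script depends on those four environment variables, a quick pre-flight check can save a failed run. The helper below is a hypothetical convenience, not part of the template; it only uses the variable names listed above and the standard library.

```python
import os

REQUIRED_VARS = ("OPENAI_API_KEY", "NEO4J_USERNAME", "NEO4J_PASSWORD", "NEO4J_URI")


def missing_env_vars(env=None) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    # Fall back to the real process environment when no mapping is passed in.
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

Calling `missing_env_vars()` with no arguments checks the current shell environment, and an empty list means all four variables are set.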

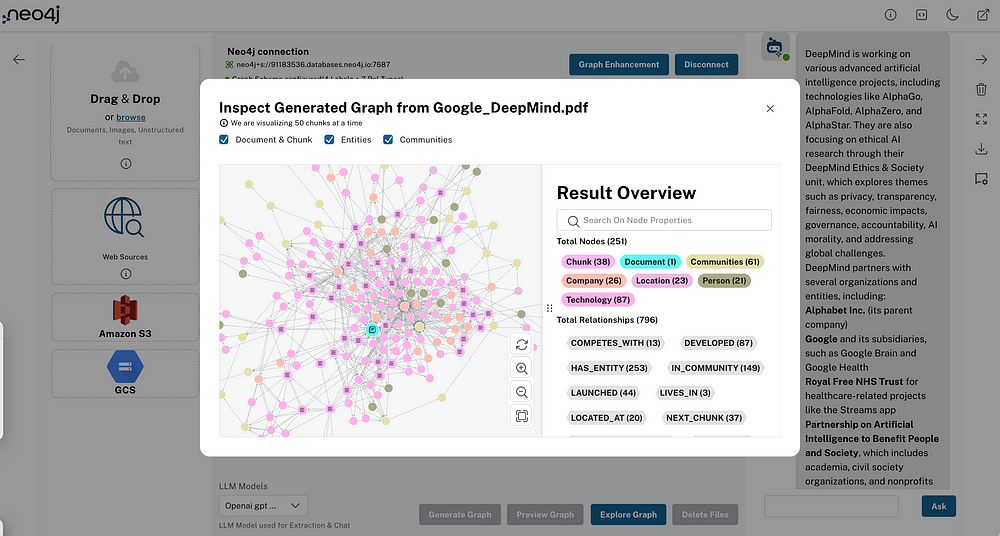

The ingest can take a minute as we use the LLM to generate hypothetical questions and summaries. If you inspect the generated graph in Neo4j Browser, you should get a similar visualization:

The purple nodes are the parent documents, which have a length of 512 tokens. Each parent document has multiple child nodes (orange) that contain a subsection of the parent document. Additionally, the parent nodes have potential questions represented as blue nodes and a single summary node in red.
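The parent and child layout described above can be approximated in a few lines. This is only a sketch: it splits on whitespace instead of using a real tokenizer, and the child size of 100 tokens is an assumption chosen for illustration (only the 512-token parent size comes from this post).

```python
def chunk(tokens: list[str], size: int) -> list[list[str]]:
    """Split a token list into consecutive chunks of at most `size` tokens."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [tokens[i : i + size] for i in range(0, len(tokens), size)]


def build_parent_child_chunks(
    text: str, parent_size: int = 512, child_size: int = 100
) -> list[dict]:
    """Split text into parent chunks, then split each parent into children."""
    tokens = text.split()  # whitespace split as a stand-in for a real tokenizer
    return [
        {
            "parent": " ".join(parent),
            "children": [" ".join(child) for child in chunk(parent, child_size)],
        }
        for parent in chunk(tokens, parent_size)
    ]
```

Each dictionary corresponds to one purple parent node with its orange child nodes; the question and summary nodes would be generated from the `parent` text by the LLM.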

As we have all the data needed for different strategies in a single store, we can easily compare the results of using different advanced retrieval strategies in the Playground application. You need to change server.py to include the neo4j-advanced-rag template as an endpoint:

from fastapi import FastAPI
from langserve import add_routes
from neo4j_advanced_rag import chain as neo4j_advanced_chain

app = FastAPI()

# Add this
add_routes(app, neo4j_advanced_chain, path="/neo4j-advanced-rag")

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)

You can now serve this template by running the following command in the root application directory.

langchain serve
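Once the server is running, the endpoint can also be called over HTTP, which is handy for scripted comparisons. The sketch below posts to the `/invoke` route that LangServe adds under the path registered in server.py. Treat the payload shape as an assumption: it presumes the chain takes a `question` field as input and exposes the retrieval strategy as a configurable field named `strategy`, so check the template's chain definition for the actual names and values.

```python
import json
import urllib.request

# Port and path match the server.py shown earlier.
BASE_URL = "http://localhost:8000/neo4j-advanced-rag"


def build_invoke_payload(question: str, strategy: str) -> dict:
    """Build the JSON body for the invoke route."""
    # Assumption: the input field is "question" and the strategy is a
    # configurable field called "strategy".
    return {
        "input": {"question": question},
        "config": {"configurable": {"strategy": strategy}},
    }


def invoke(question: str, strategy: str, base_url: str = BASE_URL) -> dict:
    """POST the question to the running server and return the parsed JSON."""
    body = json.dumps(build_invoke_payload(question, strategy)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Looping over a list of strategy names with the same question gives a side-by-side comparison similar to switching the dropdown in the playground.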

Finally, you can open the playground application in your browser and compare different advanced RAG retrieval approaches.

LangServe Playground offers a nice user interface to test out and inspect various LangChain templates. For example, you can expand the “intermediate steps” and inspect which documents were passed to the LLM, what’s in the prompt, and all the other details of a chain.

Since the strategy can be selected in the dropdown menu, you can easily compare how the output differs based on the selected retrieval strategy (or inspect documents in the intermediate steps section):

Even with such a tiny dataset (17 documents of 512 tokens), we found examples where typical RAG retrieval might fail. It’s important to learn advanced RAG retrieval strategies and implement them in your application for a good user experience.

Advanced RAG for Better Accuracy and Context

In today’s RAG applications, the ability to retrieve accurate and contextual information from a large text corpus is crucial. The traditional approach to vector similarity search, while powerful, sometimes overlooks the specific context when longer text is embedded.

By splitting longer documents into smaller chunks and indexing their vectors for similarity search, we increase retrieval accuracy while retaining the contextual information of parent documents to generate the answers. Similarly, we can use the LLM to generate hypothetical questions or summaries of text. We then index those questions and summaries for a better representation of specific concepts while still returning the information from the parent document.

Test it out, and let us know how it goes!

Learning Resources

To learn more about building smarter LLM applications with knowledge graphs, check out the other posts in this blog series.