Knowledge Graphs & LLMs: Multi-Hop Question Answering

Graph ML and GenAI Research, Neo4j


Retrieval-augmented generation (RAG) applications excel at answering simple questions by integrating data from external sources into LLMs. But they struggle to answer multi-part questions that involve connecting the dots between associated pieces of information. That’s because RAG applications require a database designed to store data in a way that makes it easy to find all the pieces needed to answer these types of questions.

Knowledge graphs are well-suited for handling complex, multi-part questions because they store data as a network of nodes and the relationships between them. This connected data structure allows RAG apps to navigate from one piece of information to another efficiently, accessing all related information. The technique of combining RAG with knowledge graphs is known as GraphRAG.

Building a RAG app with a knowledge graph improves query efficiency, especially when you’re dealing with connected data. You can also load any type of data (structured and unstructured) into the graph without having to redesign the schema.

This blog post explores:

- The inner workings of RAG applications

- Knowledge graphs as an efficient information storage solution

- Combining graph and textual data for enhanced insights

- Applying chain-of-thought question-answering techniques

How RAG Works

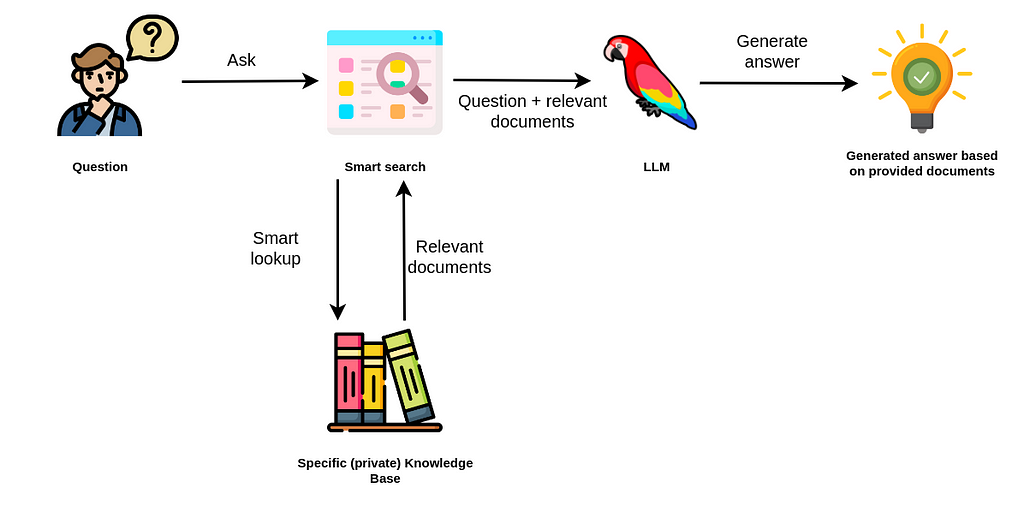

Retrieval-augmented generation (RAG) is a technique that enhances the responses of LLMs by retrieving relevant information from external databases and incorporating it into the generated output.

The RAG process is simple. When a user asks a question, an intelligent search tool looks for relevant information in the provided databases:

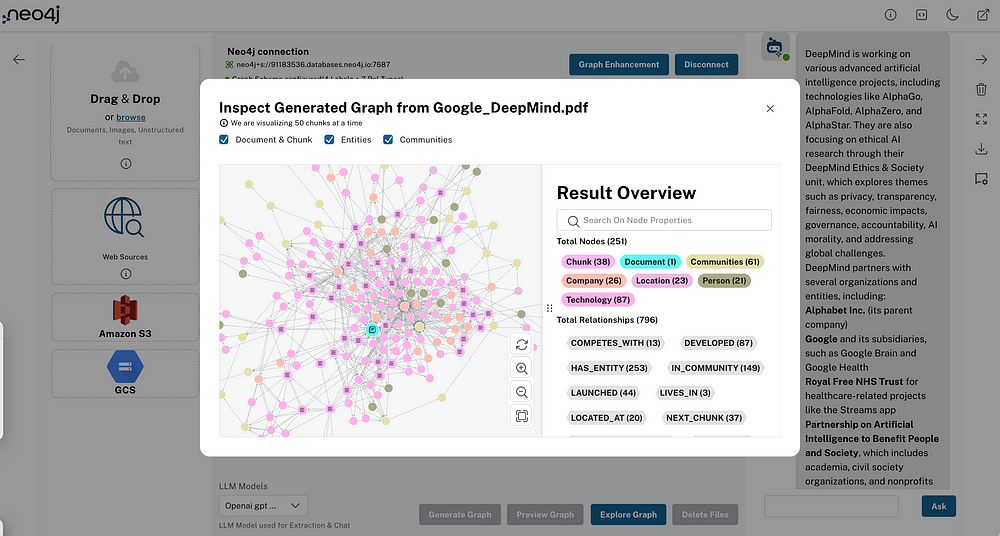

You’ve likely used tools like Chat with Your PDF that search for information in a provided document. Most of those tools use vector similarity search to identify the chunks of text that contain data similar to a user’s question. The implementation is straightforward, as shown in the diagram below.

The PDFs (or other document types) are first split into multiple chunks of text. You can use different strategies depending on how large the text chunks should be or if there should be any overlap between them. Then, the RAG application uses a text embedding model to generate vector representations of text chunks.
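The chunking step can be sketched in a few lines of Python. This is a minimal illustration, assuming word-based chunks; the function name `chunk_text` and its parameters are hypothetical, and real pipelines often split on tokens, sentences, or document structure instead.

```python
def chunk_text(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words (an illustrative strategy).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


chunks = chunk_text(
    "Dario and Daniela used to work at OpenAI before founding Anthropic",
    chunk_size=5,
    overlap=2,
)
for chunk in chunks:
    print(chunk)
```

With an overlap of two words, the last two words of each chunk reappear at the start of the next one, which is one way to keep references from being cut off at chunk boundaries.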

That’s all the preprocessing required to perform a vector similarity search. At query time, the RAG application encodes the user input as a vector and uses a similarity measure such as cosine similarity to compare the user input with the embedded text chunks.

Typically, the RAG application returns the three most similar documents to provide context to the LLM, which enhances its ability to generate accurate answers. This approach works fairly well when the vector search can identify relevant chunks of text.
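To make the query-time step concrete, here is a small sketch of top-k retrieval with cosine similarity. The `embed` function is a toy bag-of-words stand-in for a real text embedding model, and the function names and sample chunks are assumptions made for illustration only.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(question: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, embed(chunk)),
        reverse=True,
    )
    return ranked[:k]


sample_chunks = [
    "shariq worked at openai",
    "dario founded anthropic",
    "the weather is sunny today",
]
best = top_k("who worked at openai", sample_chunks, k=1)
print(best)
```

A production system would swap the toy `embed` for a learned embedding model and use a vector index instead of sorting every chunk, but the ranking logic is the same.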

However, a simple vector similarity search might not be sufficient when the LLM needs information from multiple documents or even multiple chunks of text to generate an answer. For example, consider the question:

Did any of the former OpenAI employees start their own company?

This question is multi-part in that it contains two questions:

- Who are the former employees of OpenAI?

- Did any of them start their own company?

Answering these types of questions is a multi-hop question-answering task, where a single question can be broken down into multiple sub-questions, and getting an accurate answer requires retrieval of numerous documents.

Simply chunking and embedding documents in a database and then using plain vector similarity search won’t hit the mark for multi-hop questions. Here’s why:

- Repeated information in top N documents: The provided documents aren’t guaranteed to contain all the information required to answer a question fully. For example, the top three similar documents might all mention that Shariq worked at OpenAI and possibly founded a company while ignoring all the other former employees who became founders.

- Missing reference information: Depending on the chunk sizes, some chunks might not contain the full context or references to the entities mentioned in the text. Overlapping the chunks can partially mitigate the issue of missing references. There are also examples where the references point to another document, so you need co-reference resolution or another preprocessing technique.

- Hard to define the ideal N number of retrieved documents: Some questions require more documents for an LLM to be accurate, while in other situations, a large number of documents would only increase the noise (and cost).

In some instances, the similarity search returns duplicated information while other relevant information is missed, either because the number of retrieved documents (N) is too low or because the relevant chunks are too distant from the question in embedding space.

It’s clear that plain vector similarity search falls short for multi-hop questions. But we can employ multiple strategies to answer multi-hop questions that require information from various documents.

Knowledge Graph as Condensed Information Storage

If you’ve paid close attention to the LLM space, you’ve likely seen techniques that condense information to make it more accessible at query time. For example, you could use an LLM to provide a summary of documents and then embed and store the summaries instead of the actual documents. Using this approach, you remove a lot of noise, get better results, and worry less about prompt token space.

You can also perform contextual summarization at ingestion or during query time. Contextual compression during query time is more guided because it picks the context relevant to the provided question. But the heavier the workload during the query, the worse you can expect the user latency to be. We recommend moving as much of the workload to ingestion time as possible to improve latency and avoid other runtime issues.

The same approach can be applied to summarize conversation history to avoid running into token limit problems.

I haven’t seen any articles about combining and summarizing multiple documents as a single record. There are probably too many combinations of documents that we could merge and summarize, making it too costly to process all the combinations of documents at ingestion time. Knowledge graphs overcome this problem.

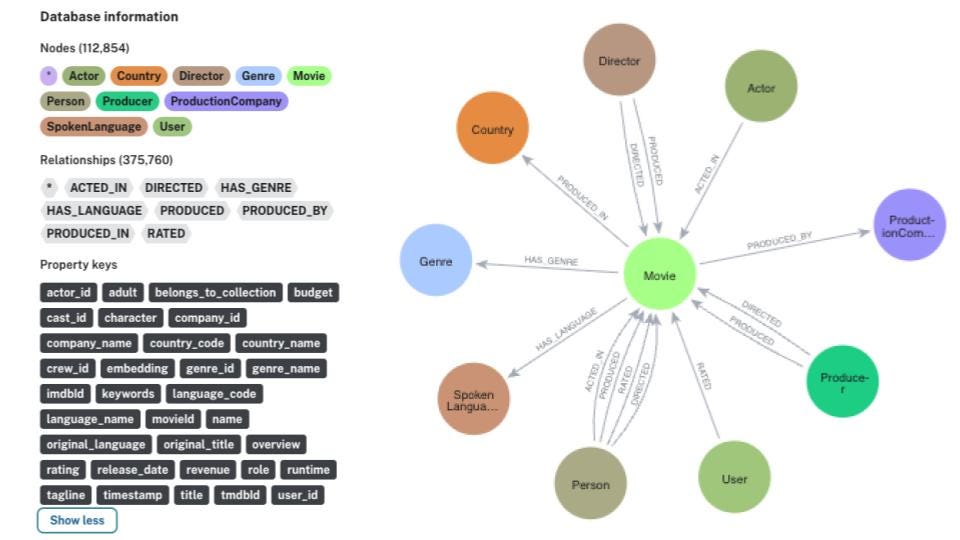

The information extraction pipeline has been around for some time. It’s the process of extracting structured information from unstructured text, often in the form of entities and relationships. The beauty of combining it with knowledge graphs is that you can process each document individually. When the knowledge graph is constructed or enriched, the information from different records gets connected.

The knowledge graph uses nodes and relationships to represent data. In this example, the first document provided the information that Dario and Daniela used to work at OpenAI, while the second document offered information about their Anthropic startup. Each record was processed individually, but the knowledge graph representation connects the data, making it easy to answer questions that span multiple documents.
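The following sketch shows how facts extracted from separate documents become connected once they land in the same graph. It uses a tiny in-memory structure rather than a graph database, and the triples, the relationship types `WORKED_AT` and `FOUNDED`, and the class and function names are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical output of an information extraction step, one list per document.
doc1_triples = [
    ("Dario", "WORKED_AT", "OpenAI"),
    ("Daniela", "WORKED_AT", "OpenAI"),
]
doc2_triples = [
    ("Dario", "FOUNDED", "Anthropic"),
    ("Daniela", "FOUNDED", "Anthropic"),
]


class KnowledgeGraph:
    """Minimal in-memory graph indexed by relationship direction."""

    def __init__(self):
        # (source, relationship type) -> set of targets
        self.outgoing = defaultdict(set)
        # (target, relationship type) -> set of sources
        self.incoming = defaultdict(set)

    def add_triples(self, triples):
        for source, rel_type, target in triples:
            self.outgoing[(source, rel_type)].add(target)
            self.incoming[(target, rel_type)].add(source)


def companies_founded_by_former_employees(graph, employer):
    """Two hops: employer <- WORKED_AT - person - FOUNDED -> company."""
    result = {}
    for person in graph.incoming.get((employer, "WORKED_AT"), set()):
        founded = graph.outgoing.get((person, "FOUNDED"), set())
        if founded:
            result[person] = sorted(founded)
    return result


graph = KnowledgeGraph()
graph.add_triples(doc1_triples)  # each document is processed on its own
graph.add_triples(doc2_triples)

answer = companies_founded_by_former_employees(graph, "OpenAI")
print(answer)
```

Neither document alone answers the question, but because both refer to the same person nodes, the two-hop lookup succeeds without any document ever being merged or summarized with another.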

Most of the newer LLM approaches to answering multi-hop questions focus on solving the task at query time. The truth is that many multi-hop question-answering issues can be solved by preprocessing data before ingestion and connecting it in a knowledge graph. You can use LLMs or custom domain-specific text models to run the information extraction pipeline.

To retrieve information from the knowledge graph at query time, we have to construct an appropriate Cypher statement. Luckily, LLMs are pretty good at translating natural language to the Cypher graph query language.

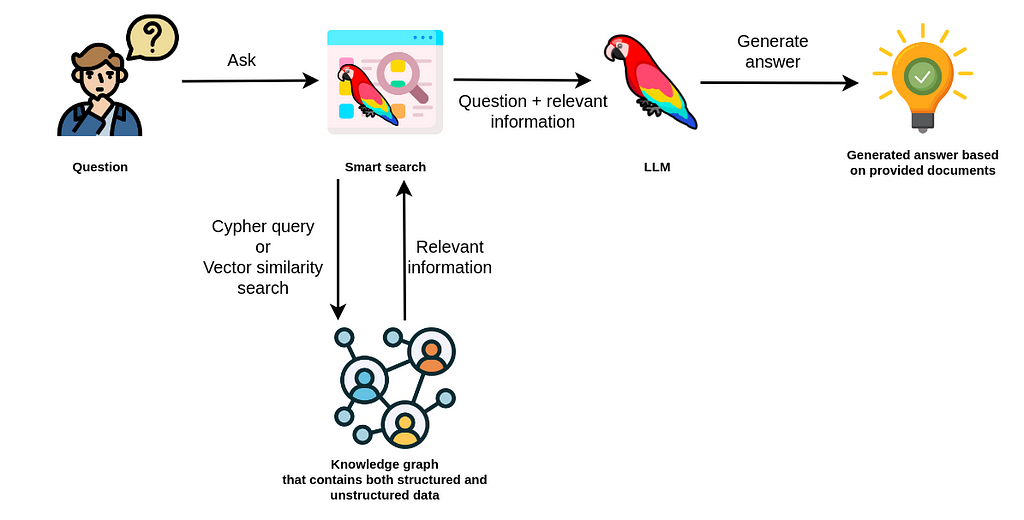

The smart search uses an LLM to generate an appropriate Cypher statement to retrieve information from a knowledge graph. The information is then passed to another LLM call, which uses the original question and the provided information to generate an answer. In practice, you can use different LLMs for generating Cypher statements and answers, or you can use various prompts on a single LLM.
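The two-step flow can be outlined as a small function that takes its three stages as parameters. Everything here is a sketch under stated assumptions: the `fake_*` functions are hypothetical stand-ins for real LLM calls and a real database query, and the node labels and relationship types in the Cypher string are an assumed schema, not one defined in this post.

```python
from typing import Callable


def graph_rag_answer(
    question: str,
    generate_cypher: Callable[[str], str],
    run_cypher: Callable[[str], list[dict]],
    generate_answer: Callable[[str, list[dict]], str],
) -> str:
    """Question -> Cypher (LLM call 1) -> rows -> answer (LLM call 2)."""
    cypher = generate_cypher(question)
    rows = run_cypher(cypher)
    return generate_answer(question, rows)


# --- Hypothetical stand-ins so the sketch runs without an LLM or database ---

def fake_generate_cypher(question: str) -> str:
    # A real implementation would prompt an LLM with the graph schema.
    return (
        "MATCH (p:Person)-[:WORKED_AT]->(:Organization {name: 'OpenAI'}), "
        "(p)-[:FOUNDED]->(c:Organization) "
        "RETURN p.name AS person, c.name AS company"
    )


def fake_run_cypher(cypher: str) -> list[dict]:
    # A real implementation would send the statement to the graph database.
    return [
        {"person": "Dario", "company": "Anthropic"},
        {"person": "Daniela", "company": "Anthropic"},
    ]


def fake_generate_answer(question: str, rows: list[dict]) -> str:
    # A real implementation would pass the question and rows to an LLM.
    if not rows:
        return "I could not find any."
    facts = "; ".join(f"{r['person']} founded {r['company']}" for r in rows)
    return f"Yes: {facts}."


result = graph_rag_answer(
    "Did any of the former OpenAI employees start their own company?",
    fake_generate_cypher,
    fake_run_cypher,
    fake_generate_answer,
)
print(result)
```

Passing the stages in as callables mirrors the point above: the Cypher-generating model and the answer-generating model can be different LLMs, or the same LLM with different prompts.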

Combining Graph and Textual Data

Sometimes, you’ll need to combine graph and textual data to find relevant information. For example, consider this question:

What’s the latest news about the founders of Prosper Robotics?

In this example, you’d want the LLM to identify the Prosper Robotics founders using the knowledge graph structure and then retrieve recent articles that mention them.

In the knowledge graph, you’d start from the Prosper Robotics node, traverse to its founders, and then retrieve the latest articles that mention them.

A knowledge graph represents structured information about entities and their relationships, as well as unstructured text as node properties. You can also use natural language techniques like named entity recognition to connect unstructured information to relevant entities in the knowledge graph, as shown by the MENTIONS relationship.
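The traversal from a company to its founders and on to the articles that mention them can be sketched with plain Python data. The article titles and dates below are placeholders invented for the example, and the dictionary layout is only one hypothetical way to mirror founder and MENTIONS relationships in memory.

```python
from datetime import date

# Structured part of the graph: company -> founders.
founded_by = {"Prosper Robotics": ["Shariq Hashme"]}

# Unstructured part: articles with the entities they mention
# (the role played by MENTIONS relationships). Placeholder data.
articles = [
    {"title": "Article A", "published": date(2023, 5, 1),
     "mentions": ["Shariq Hashme"]},
    {"title": "Article B", "published": date(2023, 9, 12),
     "mentions": ["Shariq Hashme", "OpenAI"]},
    {"title": "Article C", "published": date(2023, 7, 3),
     "mentions": ["Anthropic"]},
]


def latest_news_about_founders(company: str, limit: int = 5) -> list[str]:
    """Hop 1: company -> founders. Hop 2: founders -> articles, newest first."""
    founders = set(founded_by.get(company, []))
    hits = [a for a in articles if founders & set(a["mentions"])]
    hits.sort(key=lambda a: a["published"], reverse=True)
    return [a["title"] for a in hits[:limit]]


news = latest_news_about_founders("Prosper Robotics")
print(news)
```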

When a knowledge graph contains structured and unstructured data, the smart search tool can use Cypher queries or vector similarity search to retrieve relevant information. In some cases, you can also use a combination of the two. For example, you can start with a Cypher query to identify relevant documents and then apply vector similarity search to find specific information within those documents.
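As a rough sketch of the combined approach, the example below first narrows the candidates with a structured filter (the role a Cypher query would play) and then ranks the survivors by vector similarity. The bag-of-words `embed` function is a toy stand-in for a real embedding model, and the function names and document layout are assumptions.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


documents = [
    {"text": "shariq hashme founded prosper robotics",
     "mentions": {"Shariq Hashme", "Prosper Robotics"}},
    {"text": "shariq hashme worked at openai",
     "mentions": {"Shariq Hashme", "OpenAI"}},
    {"text": "dario and daniela founded anthropic",
     "mentions": {"Dario", "Daniela", "Anthropic"}},
]


def hybrid_search(question: str, entity: str, k: int = 1) -> list[str]:
    # Step 1: structured filter, keeping only documents linked to the entity.
    candidates = [d for d in documents if entity in d["mentions"]]
    # Step 2: vector similarity search within the filtered documents.
    query_vector = embed(question)
    ranked = sorted(
        candidates,
        key=lambda d: cosine(query_vector, embed(d["text"])),
        reverse=True,
    )
    return [d["text"] for d in ranked[:k]]


hits = hybrid_search("who founded a robotics company", "Shariq Hashme")
print(hits)
```

The filter removes the Anthropic document before any similarity score is computed, so a chunk that merely shares vocabulary with the question cannot crowd out the relevant one.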

Using Knowledge Graphs in Chain-of-Thought Flow

Another fascinating development around LLMs is chain-of-thought question answering, especially with LLM agents.

LLM agents can separate questions into multiple steps, define a plan, and draw from any of the provided tools to generate an answer. Typically, the agent tools consist of APIs or knowledge bases that the agent can query to retrieve additional information.

Let’s again consider the same question:

What’s the latest news about the founders of Prosper Robotics?

Suppose you don’t have explicit connections between articles and the entities they mention, or the articles and entities are in different databases. An LLM agent using a chain-of-thought flow would be very helpful in this case. First, the agent would separate the question into sub-questions:

- Who is the founder of Prosper Robotics?

- What’s the latest news about the founder?

Now the agent can decide which tool to use. Let’s say it’s grounded on a knowledge graph, which means it can retrieve structured information, like the name of the founder of Prosper Robotics.

The agent discovers that the founder of Prosper Robotics is Shariq Hashme. Now the agent can rewrite the second question with the information from the first question:

- What’s the latest news about Shariq Hashme?
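The steps above can be sketched as a fixed two-step plan. In a real agent, an LLM would produce the sub-questions and choose the tools; here the plan is hard-coded, and both tool functions are hypothetical stubs rather than real integrations.

```python
def knowledge_graph_tool(question: str) -> str:
    """Hypothetical tool: answers from structured data (a hard-coded lookup)."""
    facts = {"Who is the founder of Prosper Robotics?": "Shariq Hashme"}
    return facts[question]


def news_search_tool(question: str) -> str:
    """Hypothetical tool standing in for a vector database or news API."""
    return f"<search results for: {question}>"


def run_plan(company: str) -> list[tuple[str, str]]:
    """Answer the first sub-question, then rewrite the second one with it."""
    steps = []

    first_question = f"Who is the founder of {company}?"
    founder = knowledge_graph_tool(first_question)
    steps.append((first_question, founder))

    # The answer to step 1 is substituted into the second sub-question.
    second_question = f"What's the latest news about {founder}?"
    steps.append((second_question, news_search_tool(second_question)))
    return steps


steps = run_plan("Prosper Robotics")
for question, observation in steps:
    print(question, "->", observation)
```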

The agent can use a range of tools to produce an answer, including knowledge graphs, vector databases, APIs, and more. The access to structured information allows LLM applications to perform analytics workflows where aggregation, filtering, or sorting is required. Consider these questions:

- Which company with a solo founder has the highest valuation?

- Who founded the most companies?

Plain vector similarity search has trouble answering these analytical questions since it searches through unstructured text data, making it hard to sort or aggregate data.
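Once the data is structured, both analytical questions reduce to a filter, a sort, or a count. The sketch below uses placeholder companies, founders, and valuations invented purely for illustration; in a graph database the same logic would typically be expressed as an aggregating query.

```python
from collections import Counter

# Placeholder rows; names and valuations are made up for the example.
companies = [
    {"name": "Company A", "founders": ["Alice"], "valuation": 50},
    {"name": "Company B", "founders": ["Alice", "Bob"], "valuation": 200},
    {"name": "Company C", "founders": ["Bob"], "valuation": 120},
    {"name": "Company D", "founders": ["Bob"], "valuation": 80},
]

# Which company with a solo founder has the highest valuation?
solo_founded = [c for c in companies if len(c["founders"]) == 1]
top_solo = max(solo_founded, key=lambda c: c["valuation"])["name"]

# Who founded the most companies?
founder_counts = Counter(f for c in companies for f in c["founders"])
most_prolific, company_count = founder_counts.most_common(1)[0]

print(top_solo)
print(most_prolific, company_count)
```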

While chain-of-thought demonstrates the reasoning capabilities of LLMs, it’s not the most user-friendly technique since response latency can be high due to multiple LLM calls. But we’re still very excited to understand more about integrating knowledge graphs into chain-of-thought flows for many use cases.

Why Use a Knowledge Graph for RAG Applications

RAG applications often require retrieving information from multiple sources to generate accurate answers. While text summarization can be challenging, representing information in a graph format has several advantages.

By processing each document separately and connecting them in a knowledge graph, we can construct a structured representation of the information. This approach allows for easier traversal and navigation through interconnected documents, enabling multi-hop reasoning to answer complex queries. Also, constructing the knowledge graph during the ingestion phase reduces workload during query time, which improves latency.

RAG applications will increasingly use both structured and unstructured data to generate more accurate answers. Knowledge graphs can easily absorb all types of data. This flexibility makes them suitable for a wide range of use cases and LLM applications, especially those involving relationships between entities (think fraud detection, supply chain, master data management, etc.).

Read through our documentation on this project on the GitHub repository.

Learning Resources

To learn more about building smarter LLM applications with knowledge graphs, check out the other posts in this blog series.

- LangChain Library Adds Full Support for Neo4j Vector Index

- Implementing Advanced Retrieval RAG Strategies With Neo4j

- Harnessing Large Language Models With Neo4j

- Construct Knowledge Graphs From Unstructured Text

- Using a Knowledge Graph to Implement a RAG Application

- The Developer’s Guide: How to Build a Knowledge Graph