Neo4j Live: Taming LLM Hallucinations for Medical Q&A with Neo4j

In high-stakes domains like healthcare, precision and factual accuracy are non-negotiable. Yet even the most capable large language models (LLMs) have a well-known weakness: hallucinations. To mitigate these risks, a new generation of Retrieval-Augmented Generation (RAG) pipelines is emerging: GraphRAG, built not only on vector search but also on structured graph data.


In this Neo4j Live session, AI engineer and consultant Quinten Rosseel walked us through “Mina”, a system designed to help medical professionals manage patient questions using grounded GraphRAG. The goal is to ensure reliable answers while preserving efficiency and multilingual reach.

The Use Case: Q&A for Medical Procedures

The Mina system emerged from a real-world problem in Belgian hospitals: patients have important post-operative questions, like “When can I return to work?” or “Can I go golfing after my cataract surgery?”, but the official documentation often doesn’t cover these specifics.

This leads to email and phone backlogs, delays and uncertainty. Quinten partnered with practising doctors to build a lightweight, safe and effective assistant that helps answer routine questions while deferring unclear ones to medical staff.

The design goals were:

- Support nuanced, personalised questions across multiple languages

- Avoid hallucinations and clearly distinguish validated from unvalidated answers

- Be lightweight enough for busy doctors and safe enough to be trusted

Why LLMs Alone Aren’t Enough

While LLMs can generate human-like answers, their tendency to hallucinate is a serious liability in the medical domain. Patients acting on inaccurate information may face harmful outcomes, and doctors rightly demand better.

Quinten outlined two major causes of hallucination:

- Context confusion: The LLM can’t always distinguish between user input and generated text in its prompt window, especially when fine-tuned for conversation.

- Misalignment: Reinforcement learning from human feedback (RLHF) may train the model to “sound” helpful rather than to be strictly truthful.

To avoid this, Mina uses a layered approach: it generates answers only from verified knowledge and lets the LLM structure responses, not invent them.

Enter the Graph: Why Neo4j?

The system stores all validated question-answer pairs in a Neo4j graph. But why a graph, instead of a simple vector DB or flat document store?

Graph structures offer several unique advantages:

- Expressive filtering: Filter results by metadata, tags, language, validation status and more using Cypher.

- Structural context: Answers can be linked to questions, domains or tags, allowing richer embeddings.

- Adaptability: The schema can evolve without needing rigid upfront modelling.

Quinten’s team uses Neo4j as both a graph database and a vector store, enabling hybrid retrieval strategies that blend symbolic reasoning with semantic similarity.
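
As a rough illustration of this kind of hybrid retrieval, the sketch below builds a Cypher query that runs a vector index lookup and then filters the hits by metadata. The `db.index.vector.queryNodes` procedure is part of Neo4j's vector index support. Everything else is an assumption made for this example rather than Mina's actual schema: the index name `question_embeddings`, the `Answer` label, the `ANSWERED_BY` relationship, and the `language` and `validated` properties.

```python
from typing import Any, Sequence

# Hypothetical schema, assumed for illustration only:
# (:Question {text, language, embedding})-[:ANSWERED_BY]->(:Answer {text, validated})
HYBRID_QUERY = """
CALL db.index.vector.queryNodes($index_name, $k, $embedding)
YIELD node AS question, score
MATCH (question)-[:ANSWERED_BY]->(answer:Answer)
WHERE question.language = $language AND answer.validated = true
RETURN question.text AS question, answer.text AS answer, score
ORDER BY score DESC
LIMIT $limit
"""


def build_retrieval_query(
    embedding: Sequence[float],
    language: str,
    limit: int = 5,
    oversample: int = 4,
) -> tuple[str, dict[str, Any]]:
    """Return the Cypher text and its parameters for one retrieval call."""
    if limit < 1 or oversample < 1:
        raise ValueError("limit and oversample must be positive")
    params = {
        "index_name": "question_embeddings",  # assumed index name
        "k": limit * oversample,  # fetch extra neighbours before filtering
        "embedding": list(embedding),
        "language": language,
        "limit": limit,
    }
    return HYBRID_QUERY, params
```

With the official Python driver, the returned pair could be passed to `session.run(query, params)`. The oversampling factor is there because the metadata filter in this sketch runs after the nearest-neighbour lookup, so some of the `k` neighbours may be discarded.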

System Architecture: Custom RAG for Doctors

The Mina pipeline is customised per hospital or doctor. Here’s how it works:

- Question intake: The user submits a question, and language detection runs in parallel.

- Semantic retrieval: Similar questions and answers are retrieved from the graph using a vector index, filtered by metadata (language, validation, etc.).

- Relevance classification: LLMs or classifiers assess whether the retrieved Q&A pairs are truly relevant.

- Answer generation: If a high-confidence match exists, the system uses the known answer. If not, it prompts the doctor or says, “I don’t know.”

- Validation loop: Doctors or assistants can validate new Q&As. These are added to the graph and indexed for future use.

This approach limits hallucination while allowing the system to learn and grow.
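
The control flow above can be sketched in a few lines of plain Python. This is a simplified stand-in, not the Mina implementation: an in-memory list replaces the graph, a cosine-similarity threshold replaces the relevance classifier, and the language and embedding are assumed to be computed elsewhere and passed in. The class name, the threshold value and the fallback message are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class QAPair:
    question: str
    answer: str
    language: str
    embedding: list[float]
    validated: bool = False


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Assistant:
    store: list[QAPair] = field(default_factory=list)
    pending: list[tuple[str, str]] = field(default_factory=list)  # questions awaiting a doctor
    threshold: float = 0.9  # assumed relevance cut-off

    def ask(self, question: str, language: str, embedding: list[float]) -> str:
        # Only validated pairs in the user's language are eligible.
        candidates = [p for p in self.store if p.validated and p.language == language]
        best = max(candidates, key=lambda p: cosine(p.embedding, embedding), default=None)
        if best is not None and cosine(best.embedding, embedding) >= self.threshold:
            return best.answer  # reuse the known answer, never invent one
        self.pending.append((question, language))  # defer to medical staff
        return "I don't know. Your question has been forwarded to the medical staff."

    def validate(self, question: str, answer: str, language: str, embedding: list[float]) -> None:
        # Validation loop: a doctor-approved pair becomes retrievable.
        self.store.append(QAPair(question, answer, language, embedding, validated=True))
```

The key design choice is that the fallback path never generates a free-form answer: an unmatched question is queued for a human, and only a validated pair can be returned later.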

Indexing and Retrieval: Getting It Right

Quinten emphasised the importance of choosing the right embedding model and indexing strategy:

- Use domain-representative embeddings: Generic models like OpenAI’s text-embedding-ada-002 may not perform well in specialised domains. Open models like Nomic or G-BERT variants are worth exploring.

- Use the graph structure: Rather than embedding isolated questions, create composite texts that include contextual graph information (tags, related nodes, etc.). This improves retrieval fidelity.

- Consider matryoshka embeddings: These are trained so that a truncated prefix of the vector is still a usable embedding, which enables fast coarse filtering on short vectors followed by re-ranking on the full ones.
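
Two of the ideas above lend themselves to a short sketch: building a composite text from graph context, and using truncated matryoshka vectors for a coarse pass before re-ranking with full vectors. The function names, the text template and the parameters are assumptions for illustration. Truncation is only meaningful for models that were trained with a matryoshka objective.

```python
import math


def composite_text(question: str, tags: list[str], procedure: str) -> str:
    """Join a question with graph context so the embedding sees both."""
    return f"Procedure: {procedure}\nTags: {', '.join(sorted(tags))}\nQuestion: {question}"


def truncate_embedding(vector: list[float], dims: int) -> list[float]:
    """Keep the first `dims` components and re-normalise to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    head = vector[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm else list(head)


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def two_stage_search(
    query: list[float],
    corpus: list[list[float]],
    coarse_dims: int,
    shortlist: int,
    top_k: int,
) -> list[int]:
    """Shortlist with truncated vectors, then re-rank the shortlist with full vectors."""
    q_short = truncate_embedding(query, coarse_dims)
    coarse = sorted(
        range(len(corpus)),
        key=lambda i: dot(q_short, truncate_embedding(corpus[i], coarse_dims)),
        reverse=True,
    )[:shortlist]
    q_full = truncate_embedding(query, len(query))
    return sorted(
        coarse,
        key=lambda i: dot(q_full, truncate_embedding(corpus[i], len(corpus[i]))),
        reverse=True,
    )[:top_k]
```

In practice the truncated vectors would be precomputed and indexed rather than recomputed per query; the sketch recomputes them only to stay self-contained.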

Neo4j’s support for hybrid queries, combining full-text search, metadata filters, and cosine similarity, made it a strong fit for complex retrieval tasks.

GraphRAG for Post-Retrieval Reasoning

Mina also uses GraphRAG to refine results. For example:

- Boost answers connected to highly trusted doctors.

- Cluster similar answers to identify consensus.

- Expand or contract the retrieved context based on graph relationships.

This adds another layer of control and transparency to the process, which is essential when the output can impact patient decisions.
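
A minimal sketch of such post-retrieval refinement might look like the following. It assumes each retrieved answer already carries a trust score read from its linked doctor node and a cluster label for near-duplicate answers; both fields, the weights and the additive scoring formula are hypothetical choices for this example, not the scoring used in Mina.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    answer_id: str
    similarity: float    # vector similarity from retrieval
    doctor_trust: float  # assumed 0..1 trust score on the linked doctor node
    cluster: str         # assumed cluster label for near-duplicate answers


def rerank(
    candidates: list[Candidate],
    trust_weight: float = 0.2,
    consensus_weight: float = 0.1,
) -> list[Candidate]:
    """Boost answers from trusted doctors and answers that agree with others."""
    cluster_sizes = Counter(c.cluster for c in candidates)
    total = len(candidates)

    def score(c: Candidate) -> float:
        consensus = cluster_sizes[c.cluster] / total  # share of results in the same cluster
        return c.similarity + trust_weight * c.doctor_trust + consensus_weight * consensus

    return sorted(candidates, key=score, reverse=True)
```

Because every term in the score is explicit, a reviewer can see why one answer outranked another, which fits the transparency goal described above.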

Guardrails and Evaluations

No RAG system is complete without evaluation. Mina includes several layers of assurance:

- Prompt engineering: Prompts include disclaimers and instructions like “Say ‘I don’t know’ if unsure.”

- Factual consistency checks: Output is compared to context using entailment classifiers and sampling methods.

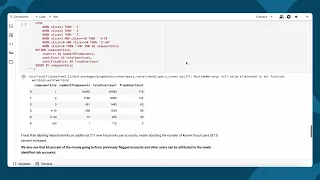

- Benchmarks: Tests use datasets like TruthfulQA and in-house validation sets to measure accuracy, mean reciprocal rank (MRR) and top-K performance.
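
For reference, the retrieval metrics named above can be computed with a few lines of Python. The sketch interprets "top-K performance" as hit rate at K (the share of queries with at least one relevant result in the first K), which is an assumption; the function names and the input shape are illustrative.

```python
from typing import Sequence

# Each run pairs the ranked result ids for one query with the set of relevant ids.
Run = tuple[Sequence[str], set[str]]


def reciprocal_rank(ranked_ids: Sequence[str], relevant_ids: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: Sequence[Run]) -> float:
    if not runs:
        return 0.0
    return sum(reciprocal_rank(ranked, relevant) for ranked, relevant in runs) / len(runs)


def hit_rate_at_k(runs: Sequence[Run], k: int) -> float:
    """Share of queries with at least one relevant result in the top k."""
    if not runs:
        return 0.0
    hits = sum(1 for ranked, relevant in runs if any(d in relevant for d in ranked[:k]))
    return hits / len(runs)
```

Tracking both numbers is useful because MRR rewards placing the right answer first, while hit rate at K only checks that it made it into the context at all.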

Graph-based reranking and Cohere integration showed measurable improvement in answer precision while reducing context size and latency.

Key Takeaways for Developers

- Use graphs for structure-aware retrieval: Neo4j lets you go beyond flat documents and encode valuable relationships.

- Combine vector search with symbolic filtering: Hybrid search yields better precision, especially in specialised domains.

- Let doctors validate the truth: Build feedback loops so trusted users can correct and expand your system.

- Design for modularity and adaptability: Different domains will require different embeddings, prompts, and filters.

- Evaluate early, iterate often: Use metrics like MRR, top-K and entailment checks to track and improve accuracy.