Link Prediction with GDSL and AWS SageMaker Autopilot (AutoML)

In this guide, we will learn how to solve a link prediction problem using the AWS SageMaker Autopilot AutoML tool and the Graph Data Science Library.

Please have Neo4j (version 4.0 or later) and the Graph Data Science Library downloaded and installed. You will also need to have an AWS account.

Intermediate

Link Prediction techniques are used to predict future or missing links in graphs. In this guide we’re going to use these techniques to predict future co-authorships using AWS SageMaker Autopilot and link prediction algorithms from the Graph Data Science Library.

The code examples used in this guide can be found in the neo4j-examples/link-prediction GitHub repository. For background reading on link prediction, see the Link Prediction guide.

Install Dependencies

We’re going to use several Python libraries in this guide, so let’s get those installed by running the following command:

pip install pandas sagemaker scikit-learn

Citation Graph

We’ll be using data from the DBLP Citation Network, which includes citation data from various academic sources. The dataset doesn’t contain relationships between authors describing their collaborations, but we can infer them based on finding articles authored by multiple people.
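To make the inference step concrete, here is a minimal sketch of deriving co-authorships from article author lists. The article names, author names, and the `infer_coauthorships` helper are hypothetical and are not taken from the DBLP dataset or from the guide's repository.

```python
from itertools import combinations

# Hypothetical toy data: each article maps to the list of its authors.
articles = {
    "article-1": ["Alice", "Bob", "Carol"],
    "article-2": ["Alice", "Bob"],
    "article-3": ["Dave"],
}


def infer_coauthorships(articles):
    """Count, for every unordered pair of authors, the articles they share."""
    collaborations = {}
    for authors in articles.values():
        # Sorting the de-duplicated authors gives each pair one canonical order.
        for pair in combinations(sorted(set(authors)), 2):
            collaborations[pair] = collaborations.get(pair, 0) + 1
    return collaborations


coauthors = infer_coauthorships(articles)
print(coauthors)
```

Single-author articles contribute no pairs, which is why Dave does not appear in the result.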

Train and test datasets

We’re going to use the train and test DataFrames that we created in the Link Prediction with scikit-learn developer guide. In that guide we split the citation graph into test and train sub graphs and engineered features using graph algorithms.

We can import those DataFrames from CSV files using the following code:

import pandas as pd

df_train_under = pd.read_csv("data/df_train_under_all.csv")
df_test_under = pd.read_csv("data/df_test_under_all.csv")

And now let’s have a look at the features that we’re going to be working with:

df_train_under.drop(columns=["node1", "node2"]).sample(5, random_state=42)

| cn | maxCoefficient | maxTriangles | minCoefficient | minTriangles | pa | sl | sp | tn | label |
|---|---|---|---|---|---|---|---|---|---|
| 4 | 1 | 10 | 1 | 10 | 25 | 1 | 1 | 6 | 1 |
| 2 | 1 | 3 | 0.333333 | 2 | 12 | 1 | 1 | 5 | 1 |
| 2 | 1 | 3 | 1 | 3 | 9 | 1 | 1 | 4 | 1 |
| 0 | 1 | 10 | 1 | 3 | 15 | 0 | 1 | 8 | 0 |
| 0 | 1 | 5 | 0.833333 | 1 | 8 | 0 | 1 | 6 | 0 |

df_test_under.drop(columns=["node1", "node2"]).sample(5, random_state=42)

| cn | maxCoefficient | maxTriangles | minCoefficient | minTriangles | pa | sl | sp | tn | label |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 28 | 0.866667 | 14 | 48 | 0 | 0 | 14 | 0 |
| 3 | 0.0689076 | 38 | 0.0584795 | 8 | 665 | 0 | 0 | 51 | 1 |
| 1 | 0.333333 | 1 | 0 | 0 | 6 | 1 | 1 | 4 | 1 |
| 4 | 0.377778 | 27 | 0.152047 | 18 | 190 | 0 | 0 | 25 | 1 |
| 2 | 0.666667 | 2 | 0.3 | 1 | 15 | 1 | 1 | 6 | 1 |
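The column names cn, pa, and tn appear to correspond to the common neighbors, preferential attachment, and total neighbors measures. That reading is an assumption, as are the toy graph and the `pair_features` helper below. This sketch only illustrates how such pairwise features could be computed; it is not the code used to build the DataFrames.

```python
# Hypothetical toy co-authorship graph: author -> set of co-authors.
graph = {
    "Alice": {"Bob", "Carol"},
    "Bob": {"Alice", "Carol", "Dave"},
    "Carol": {"Alice", "Bob"},
    "Dave": {"Bob"},
}


def pair_features(graph, node1, node2):
    """Compute three neighborhood-based features for a pair of nodes."""
    n1, n2 = graph[node1], graph[node2]
    return {
        "cn": len(n1 & n2),       # common neighbors: shared co-authors
        "pa": len(n1) * len(n2),  # preferential attachment: product of degrees
        "tn": len(n1 | n2),       # total neighbors: size of the union
    }


print(pair_features(graph, "Alice", "Dave"))
```

Alice and Dave share one co-author (Bob), so a pair like this would score higher on cn than a pair with no overlap.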

Setup AWS prerequisites

We’ll need both an AWS role and a user that have AmazonSageMakerFullAccess permissions.

We also need to generate an access key and secret for the user.

The code expects these values to be configured as environment variables:

import sagemaker
import boto3
import os
from time import gmtime, strftime, sleep

boto_session = boto3.Session(
    aws_access_key_id=os.environ["ACCESS_ID"],
    aws_secret_access_key=os.environ["ACCESS_KEY"])

region = boto_session.region_name
session = sagemaker.Session(boto_session=boto_session)
bucket = session.default_bucket()

timestamp_suffix = strftime('%Y-%m-%d-%H-%M-%S', gmtime())
prefix = 'sagemaker/link-prediction-developer-guide-' + timestamp_suffix

role = os.environ["SAGEMAKER_ROLE"]

sm = boto_session.client(service_name='sagemaker', region_name=region)

Upload dataset to S3

Now we’re going to convert the Train and Test DataFrames to CSV files and upload them to S3.

We need to make sure that the order of the columns is the same in both files. The test CSV file shouldn’t have the label field and doesn’t need the column headings either.

train_columns = [
    "cn", "pa", "tn", "minTriangles", "maxTriangles", "minCoefficient", "maxCoefficient", "sp", "sl", "label"
]
df_train_under = df_train_under[train_columns]

test_columns = [
    "cn", "pa", "tn", "minTriangles", "maxTriangles", "minCoefficient", "maxCoefficient", "sp", "sl"
]
df_test_under = df_test_under.drop(columns=["label"])[test_columns]

# The train file keeps its header row so that Autopilot can find the target column by name.
train_file = 'data/upload/train_data_binary_classifier.csv'
df_train_under.to_csv(train_file, index=False, header=True)
train_data_s3_path = session.upload_data(path=train_file, key_prefix=prefix + "/train")
print('Train data uploaded to: ' + train_data_s3_path)

# The test file has no header row and no label column.
test_file = 'data/upload/test_data_binary_classifier.csv'
df_test_under.to_csv(test_file, index=False, header=False)
test_data_s3_path = session.upload_data(path=test_file, key_prefix=prefix + "/test")
print('Test data uploaded to: ' + test_data_s3_path)

Train data uploaded to: s3://sagemaker-us-east-1-715633473519/sagemaker/link-prediction-developer-guide-2020-09-22-10-53-59/train/train_data_binary_classifier.csv
Test data uploaded to: s3://sagemaker-us-east-1-715633473519/sagemaker/link-prediction-developer-guide-2020-09-22-10-53-59/test/test_data_binary_classifier.csv
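Because the test file is written without a header, a column-order mismatch between the two files would go unnoticed. The following is a minimal sketch of a check that could be run before uploading. The `check_column_layout` helper is hypothetical, and the two lists are copied from the code above.

```python
def check_column_layout(train_columns, test_columns, target):
    """Return a list of problems; an empty list means the layouts agree."""
    problems = []
    if target not in train_columns:
        problems.append("target column missing from train columns")
    if target in test_columns:
        problems.append("target column present in test columns")
    # The feature columns must match in both name and order.
    features = [c for c in train_columns if c != target]
    if features != list(test_columns):
        problems.append("feature columns differ in name or order")
    return problems


train_columns = [
    "cn", "pa", "tn", "minTriangles", "maxTriangles",
    "minCoefficient", "maxCoefficient", "sp", "sl", "label",
]
test_columns = [
    "cn", "pa", "tn", "minTriangles", "maxTriangles",
    "minCoefficient", "maxCoefficient", "sp", "sl",
]

print(check_column_layout(train_columns, test_columns, "label"))
```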


Make sure that the independent variable ( |

Set up SageMaker Autopilot Job

We’re now ready to configure our Autopilot job. The following inputs are mandatory:

- Amazon S3 location for the input dataset and for all output artifacts
- Name of the column of the dataset you want to predict (label in this case)
- An IAM role

We’ll also add config to limit the amount of time to 5 minutes for each training job and we’ll create a maximum of 5 candidate models.

input_data_config = [{
    'DataSource': {
        'S3DataSource': {
            'S3DataType': 'S3Prefix',
            'S3Uri': 's3://{}/{}/train'.format(bucket, prefix)
        }
    },
    'TargetAttributeName': 'label'
}]

automl_job_config = {
    "CompletionCriteria": {
        "MaxRuntimePerTrainingJobInSeconds": 300,
        "MaxCandidates": 5,
    }
}

output_data_config = {
    'S3OutputPath': 's3://{}/{}/output'.format(bucket, prefix)
}

Launch SageMaker Autopilot Job

We’re now ready to launch the Autopilot job. Autopilot jobs consist of the following high-level steps:

- Analyzing Data: the dataset is analyzed and Autopilot comes up with a list of ML pipelines that should be tried out on the dataset. The dataset is also split into train and validation sets.
- Feature Engineering: Autopilot performs feature transformation on individual features of the dataset as well as at an aggregate level.
- Model Tuning: the top-performing pipeline is selected along with the optimal hyperparameters for the training algorithm (the last stage of the pipeline).

We can launch our job by calling the create_auto_ml_job function:

auto_ml_job_name = 'automl-link-' + timestamp_suffix
print('AutoMLJobName: ' + auto_ml_job_name)

sm.create_auto_ml_job(AutoMLJobName=auto_ml_job_name,
                      InputDataConfig=input_data_config,
                      OutputDataConfig=output_data_config,
                      ProblemType="BinaryClassification",
                      AutoMLJobObjective={"MetricName": "Accuracy"},
                      AutoMLJobConfig=automl_job_config,
                      RoleArn=role)

{'AutoMLJobArn': 'arn:aws:sagemaker:us-east-1:715633473519:automl-job/automl-link-2020-08-20-09-25-03', 'ResponseMetadata': {'RequestId': 'c780f695-71c6-4bc3-8401-a77beef5e7e5', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amzn-requestid': 'c780f695-71c6-4bc3-8401-a77beef5e7e5', 'content-type': 'application/x-amz-json-1.1', 'content-length': '102', 'date': 'Thu, 20 Aug 2020 09:25:04 GMT'}, 'RetryAttempts': 0}}

The job will take about 25 minutes to run, but we can track its progress.

The high-level steps will be displayed in the AutoMLJobSecondaryStatus field of the response returned by the describe_auto_ml_job function.

describe_response = sm.describe_auto_ml_job(AutoMLJobName=auto_ml_job_name)
print(describe_response['AutoMLJobStatus'] + " - " + describe_response['AutoMLJobSecondaryStatus'])
job_run_status = describe_response['AutoMLJobStatus']

# Poll every 30 seconds until the job reaches a terminal state.
while job_run_status not in ('Failed', 'Completed', 'Stopped'):
    describe_response = sm.describe_auto_ml_job(AutoMLJobName=auto_ml_job_name)
    job_run_status = describe_response['AutoMLJobStatus']
    print(describe_response['AutoMLJobStatus'] + " - " + describe_response['AutoMLJobSecondaryStatus'])
    sleep(30)

InProgress - AnalyzingData
…
InProgress - FeatureEngineering
…
InProgress - ModelTuning
…
Completed - MaxCandidatesReached

Once we see a job status of Completed and a secondary status of MaxCandidatesReached, our job has completed and we can inspect the results.
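The polling loop above runs until the job reaches a terminal state, however long that takes. As a sketch of one possible variation, the helper below adds a timeout and takes the describe call as a parameter, so it can be exercised without AWS. The `wait_for_job` name and its parameters are hypothetical and are not part of boto3 or the SageMaker SDK.

```python
import time


def wait_for_job(describe, status_key, poll_seconds=30, timeout_seconds=3600,
                 terminal=("Failed", "Completed", "Stopped"), sleep=time.sleep):
    """Call describe() until its status is terminal or the timeout passes."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        response = describe()
        status = response[status_key]
        if status in terminal:
            return response
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job still {status} after {timeout_seconds}s")
        sleep(poll_seconds)


# Stand-in for a describe call, so that the sketch runs without AWS access.
fake_responses = iter([
    {"AutoMLJobStatus": "InProgress"},
    {"AutoMLJobStatus": "InProgress"},
    {"AutoMLJobStatus": "Completed"},
])
final = wait_for_job(lambda: next(fake_responses), "AutoMLJobStatus",
                     sleep=lambda seconds: None)
print(final["AutoMLJobStatus"])
```

With the real client, the first argument would presumably be a lambda wrapping `sm.describe_auto_ml_job(AutoMLJobName=auto_ml_job_name)`, and the status key would be `AutoMLJobStatus`.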

Analyze Candidates

We can list all the candidates by running the following code:

candidates = sm.list_candidates_for_auto_ml_job(
    AutoMLJobName=auto_ml_job_name,
    SortBy='FinalObjectiveMetricValue')['Candidates']

candidates_df = pd.DataFrame({
    "name": [c["CandidateName"] for c in candidates],
    "score": [c["FinalAutoMLJobObjectiveMetric"]["Value"] for c in candidates]
})
candidates_df

| name | score |
|---|---|
| tuning-job-1-4c1fbf19caa24e2992-005-1281d468 | 0.96412 |
| tuning-job-1-4c1fbf19caa24e2992-003-4e9f7b9f | 0.96134 |
| tuning-job-1-4c1fbf19caa24e2992-002-0dbfe572 | 0.95656 |
| tuning-job-1-4c1fbf19caa24e2992-001-70eae434 | 0.90607 |
| tuning-job-1-4c1fbf19caa24e2992-004-dfa3c8ea | 0.819107 |

We can also extract just the best candidate:

best_candidate = sm.describe_auto_ml_job(
    AutoMLJobName=auto_ml_job_name)['BestCandidate']

best_df = pd.DataFrame({
    "name": [best_candidate['CandidateName']],
    "metric": [best_candidate['FinalAutoMLJobObjectiveMetric']['MetricName']],
    "score": [best_candidate['FinalAutoMLJobObjectiveMetric']['Value']]
})
best_df

| name | metric | score |
|---|---|---|
| tuning-job-1-4c1fbf19caa24e2992-005-1281d468 | validation:accuracy | 0.96412 |
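As a cross-check, the best candidate can also be picked locally from the candidate list. This is a minimal sketch that uses plain dictionaries shaped like the response fields read in the code above, with the names and scores copied from the candidates table. The `best_by_score` helper is hypothetical, and it assumes that a higher objective value is better, which holds for accuracy.

```python
# Candidate names and scores copied from the table in this guide.
candidates = [
    {"CandidateName": "tuning-job-1-4c1fbf19caa24e2992-005-1281d468",
     "FinalAutoMLJobObjectiveMetric": {"Value": 0.96412}},
    {"CandidateName": "tuning-job-1-4c1fbf19caa24e2992-003-4e9f7b9f",
     "FinalAutoMLJobObjectiveMetric": {"Value": 0.96134}},
    {"CandidateName": "tuning-job-1-4c1fbf19caa24e2992-002-0dbfe572",
     "FinalAutoMLJobObjectiveMetric": {"Value": 0.95656}},
    {"CandidateName": "tuning-job-1-4c1fbf19caa24e2992-001-70eae434",
     "FinalAutoMLJobObjectiveMetric": {"Value": 0.90607}},
    {"CandidateName": "tuning-job-1-4c1fbf19caa24e2992-004-dfa3c8ea",
     "FinalAutoMLJobObjectiveMetric": {"Value": 0.819107}},
]


def best_by_score(candidates):
    """Return the candidate with the highest objective metric value."""
    return max(candidates,
               key=lambda c: c["FinalAutoMLJobObjectiveMetric"]["Value"])


best = best_by_score(candidates)
print(best["CandidateName"])
```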

Create Model

The next step is to create a model based on one of these candidates using inference pipelines.

An inference pipeline is an Amazon SageMaker model that is composed of a linear sequence of two to five containers that process requests for inferences on data. You use an inference pipeline to define and deploy any combination of pretrained Amazon SageMaker built-in algorithms and your own custom algorithms packaged in Docker containers.

docs.aws.amazon.com/sagemaker/latest/dg/inference-pipelines.html

We can create a model by running the following code:

model_name = 'automl-link-pred-model-' + timestamp_suffix

model = sm.create_model(Containers=best_candidate['InferenceContainers'],
                        ModelName=model_name,
                        ExecutionRoleArn=role)

print('Model ARN corresponding to the best candidate is : {}'.format(model['ModelArn']))

Model ARN corresponding to the best candidate is : arn:aws:sagemaker:us-east-1:715633473519:model/automl-link-pred-model-automl-link-2020-08-20-09-25-03

Evaluate Model

Now we’re going to apply our model to the test set to see how well it fares.

We can use a transform job to do this. A transform job uses a trained model to get inferences on a dataset and saves these results to S3.

transform_job_name = 'automl-link-pred-transform-job-' + timestamp_suffix
print(test_data_s3_path, transform_job_name, model_name)

transform_input = {
    'DataSource': {
        'S3DataSource': {
            'S3DataType': 'S3Prefix',
            'S3Uri': test_data_s3_path
        }
    },
    'ContentType': 'text/csv',
    'CompressionType': 'None',
    'SplitType': 'Line'
}

transform_output = {
    'S3OutputPath': 's3://{}/{}/inference-results'.format(bucket, prefix),
}

transform_resources = {
    'InstanceType': 'ml.m5.4xlarge',
    'InstanceCount': 1
}

sm.create_transform_job(TransformJobName=transform_job_name,
                        ModelName=model_name,
                        TransformInput=transform_input,
                        TransformOutput=transform_output,
                        TransformResources=transform_resources)

{'AutoMLJobArn': 'arn:aws:sagemaker:us-east-1:715633473519:automl-job/automl-link-2020-09-22-10-53-59', 'ResponseMetadata': {'RequestId': 'e3c45bde-62b4-424f-bb2f-98479d7f4428', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amzn-requestid': 'e3c45bde-62b4-424f-bb2f-98479d7f4428', 'content-type': 'application/x-amz-json-1.1', 'content-length': '102', 'date': 'Tue, 22 Sep 2020 10:57:03 GMT'}, 'RetryAttempts': 0}}

We can track this job by using the describe_transform_job function:

describe_response = sm.describe_transform_job(TransformJobName=transform_job_name)
job_run_status = describe_response['TransformJobStatus']
print(job_run_status)

# Poll every 30 seconds until the transform job reaches a terminal state.
while job_run_status not in ('Failed', 'Completed', 'Stopped'):
    describe_response = sm.describe_transform_job(TransformJobName=transform_job_name)
    job_run_status = describe_response['TransformJobStatus']
    print(job_run_status)
    sleep(30)

InProgress
…
Completed

Once that’s completed, we can view the results of the job by running the following code:

s3_output_key = '{}/inference-results/test_data_binary_classifier.csv.out'.format(prefix)
local_inference_results_path = 'data/download/inference_results.csv'

inference_results_bucket = boto_session.resource("s3").Bucket(session.default_bucket())
inference_results_bucket.download_file(s3_output_key, local_inference_results_path)

data = pd.read_csv(local_inference_results_path, sep=';', header=None)
data.sample(10, random_state=42)

This DataFrame contains predictions for the label field of the test DataFrame, and we’re now ready to compare those predictions against the actual labels to see how well the model has performed.
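The S3 key used in the download step is the input file name with `.out` appended, placed under the transform job's output prefix. A small sketch of that naming rule follows. The `transform_output_key` helper and the bucket name are hypothetical, and the sketch assumes a single input object rather than a nested prefix.

```python
import posixpath


def transform_output_key(output_prefix, input_s3_uri):
    """Build the key of a transform result from the input object's file name."""
    file_name = posixpath.basename(input_s3_uri)
    return posixpath.join(output_prefix, file_name + ".out")


key = transform_output_key(
    "sagemaker/example-prefix/inference-results",
    "s3://example-bucket/sagemaker/example-prefix/test/test_data_binary_classifier.csv",
)
print(key)
```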

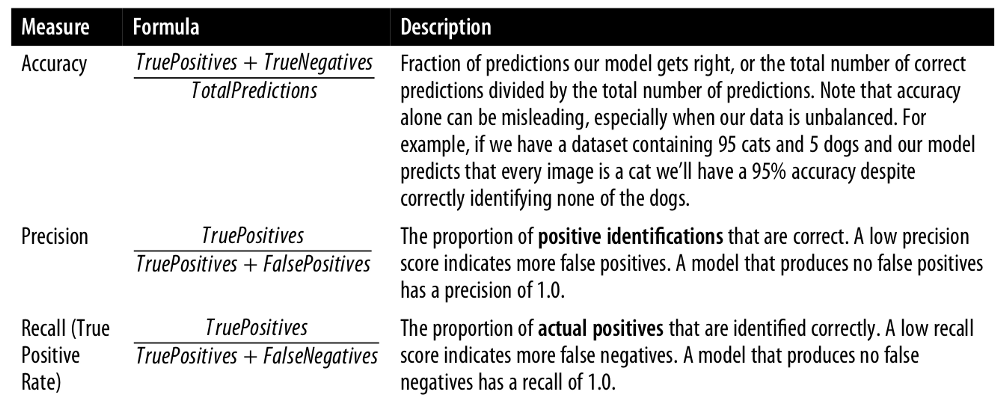

We’re going to evaluate the quality of our model by computing its accuracy, precision, and recall. The diagram below, taken from the O’Reilly Graph Algorithms Book, explains how each of these metrics is computed.
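The three metrics can also be written out directly from confusion-matrix counts. The following is a minimal sketch on made-up labels. The `classification_metrics` helper is hypothetical and is meant only to show the arithmetic, not to replace a library implementation.

```python
def classification_metrics(actual, predicted):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # true positives
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)  # true negatives
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # false positives
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels, for illustration only.
actual = [1, 1, 1, 0, 0, 0, 1, 0]
predicted = [1, 1, 0, 0, 0, 1, 1, 1]
print(classification_metrics(actual, predicted))
# → {'accuracy': 0.625, 'precision': 0.6, 'recall': 0.75}
```

Accuracy is symmetric in its two arguments, but precision and recall are not: passing the labels in the opposite order exchanges the two values, so argument order matters.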

scikit-learn has built-in functions that we can use to compute these metrics. The following helper function wraps them:

from sklearn.metrics import recall_score
from sklearn.metrics import precision_score
from sklearn.metrics import accuracy_score

def evaluate_model(predictions, actual):
    return pd.DataFrame({
        "Measure": ["Accuracy", "Precision", "Recall"],
        "Score": [accuracy_score(actual, predictions),
                  precision_score(actual, predictions),
                  recall_score(actual, predictions)]
    })

We can evaluate our model by running the following code:

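# Reload the test set, because the label column was dropped before uploading.
df_test_under = pd.read_csv("data/df_test_under_all.csv")

predictions = data[0]
y_test = df_test_under["label"]

# evaluate_model expects the predictions first and the actual labels second.
evaluate_model(predictions, y_test)

| Measure | Score |
|---|---|
| Accuracy | 0.9648176127778977 |
| Precision | 0.9643994172242607 |
| Recall | 0.9652067075311209 |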

We have accuracy, precision, and recall scores of just over 96%, which means the model has done a pretty good job of predicting likely co-authorship.

Next Steps

We’ve already got a good model, but can we do better?

Perhaps we could add more features based on the results of other algorithms? Or maybe we could increase the run time per job and the number of candidates evaluated by SageMaker to see if it can come up with a better model.

If you have any ideas or questions, please create an issue or PR on the neo4j-examples/link-prediction GitHub repository.
